Example-01: Derivative

[1]:

# Given an input function, its higher order (partial) derivatives with respect to one or sevaral tensor arguments can be computed using forward or reverse mode automatic differentiation

# Derivative orders can be different for each tensor argument

# Input function is expected to return a tensor or a (nested) list of tensors

# Derivatives are computed by nesting torch jacobian functions

# For higher order derivatives, nesting results in exponentially growing redundant computations

# Note, forward mode is more memory efficient in this case

# If the input function returns a tensor, the output is referred as derivative table representation

# This representation can be evaluated near given evaluation point (at a given deviation) if the input function returns a scalar or a vector

# Table representation is a (nested) list of tensors, it can be used as a redundant function representation near given evaluation point (taylor series)

# Table structure for f(x), f(x, y) and f(x, y, z) is shown bellow (similar structure holds for a function with more aruments)

# f(x)

# t(f, x)

# [f, Dx f, Dxx f, ...]

# f(x, y)

# t(f, x, y)

# [

# [ f, Dy f, Dyy f, ...],

# [ Dx f, Dx Dy f, Dx Dyy f, ...],

# [Dxx f, Dxx Dy f, Dxx Dyy f, ...],

# ...

# ]

# f(x, y, z)

# t(f, x, y, z)

# [

# [

# [ f, Dz f, Dzz f, ...],

# [ Dy f, Dy Dz f, Dy Dzz f, ...],

# [ Dyy f, Dyy Dz f, Dyy Dzz f, ...],

# ...

# ],

# [

# [ Dx f, Dx Dz f, Dx Dzz f, ...],

# [ Dx Dy f, Dx Dy Dz f, Dx Dy Dzz f, ...],

# [ Dx Dyy f, Dx Dyy Dz f, Dx Dyy Dzz f, ...],

# ...

# ],

# [

# [ Dxx f, Dxx Dz f, Dxx Dzz f, ...],

# [ Dxx Dy f, Dxx Dy Dz f, Dxx Dy Dzz f, ...],

# [Dxx Dyy f, Dxx Dyy Dz f, Dxx Dyy Dzz f, ...],

# ...

# ],

# ...

# ]

[2]:

# Import

import torch

from ndmap.derivative import derivative

from ndmap.evaluate import evaluate

from ndmap.series import series

torch.set_printoptions(precision=12, sci_mode=True)

print(torch.cuda.is_available())

import warnings

warnings.filterwarnings("ignore")

True

[3]:

# Set data type and device

dtype = torch.float64

device = torch.device('cpu')

[4]:

# Basic derivative interface

# derivative(

# order:int, # derivative order

# function:Callable, # input function

# *args, # function(*args) = function(x:Tensor, ...)

# intermediate:bool = True, # flag to return all intermediate derivatives

# jacobian:Callable = torch.func.jacfwd # torch.func.jacfwd or torch.func.jacfrev

# )

# derivative(

# order:tuple[int, ...], # derivative orders

# function:Callable, # input function

# *args, # function(*args) = function(x:Tensor, y:Tensor, z:Tensor, ...)

# intermediate:bool = True, # flag to return all intermediate derivatives

# jacobian:Callable = torch.func.jacfwd # torch.func.jacfwd or torch.func.jacfrev

# )

[5]:

# Derivative

# Input: scalar

# Output: scalar

# Set test function

# Note, the first function argument is a scalar tensor

# Input function can have other additional arguments

# Other arguments are not used in computation of derivatives

def fn(x, a, b, c, d, e, f):

return a + b*x + c*x**2 + d*x**3 + e*x**4 + f*x**5

# Set derivative order

n = 5

# Set evaluation point

x = torch.tensor(0.0, dtype=dtype, device=device)

# Set fixed parameters

a, b, c, d, e, f = torch.tensor([1.0, 1.0, 1.0, 1.0, 1.0, 1.0], dtype=dtype, device=device)

# Compute n'th derivative

value = derivative(n, fn, x, a, b, c, d, e, f, intermediate=False, jacobian=torch.func.jacfwd)

print(value.cpu().numpy().tolist())

# Compute all derivatives upto given order

# Note, function value itself is referred as zero order derivative

# Since function returns a tensor, output is a list of tensors

values = derivative(n, fn, x, a, b, c, d, e, f, intermediate=True, jacobian=torch.func.jacfwd)

print(*[value.cpu().numpy().tolist() for value in values], sep=', ')

# Note, intermediate flag (default=True) can be used to return all derivatives

# For jacobian parameter, torch.func.jacfwd or torch.func.jacrev functions can be passed

# Evaluate derivative table representation for a given deviation from the evaluation point

dx = torch.tensor(1.0, dtype=dtype, device=device)

print(evaluate(derivative(n, fn, x, a, b, c, d, e, f) , [dx]).cpu().numpy().tolist())

print(fn(x + dx, a, b, c, d, e, f).cpu().numpy().tolist())

120.0

1.0, 1.0, 2.0, 6.0, 24.0, 120.0

6.0

6.0

[6]:

# Derivative

# Input: vector

# Output: scalar

# Set test function

# Note, the first function argument is a vector tensor

# Input function can have other additional arguments

# Other arguments are not used in computation of derivatives

def fn(x, a, b, c):

x1, x2 = x

return a + b*(x1 - 1)**2 + c*(x2 + 1)**2

# Set derivative order

n = 2

# Set evaluation point

x = torch.tensor([0.0, 0.0], dtype=dtype, device=device)

# Set fixed parameters

a, b, c = torch.tensor([1.0, 1.0, 1.0], dtype=dtype, device=device)

# Compute only n'th derivative

# Note, for given input & output the result is a hessian

value = derivative(n, fn, x, a, b, c, intermediate=False, jacobian=torch.func.jacfwd)

print(value.cpu().numpy().tolist())

# Compute all derivatives upto given order

# Note, fuction value itself is referred as zero order derivative

# Output is a list of tensors (value, jacobian, hessian, ...)

values = derivative(n, fn, x, a, b, c, intermediate=True, jacobian=torch.func.jacfwd)

print(*[value.cpu().numpy().tolist() for value in values], sep=', ')

# Compute jacobian and hessian with torch

print(fn(x, a, b, c).cpu().numpy().tolist(),

torch.func.jacfwd(lambda x: fn(x, a, b, c))(x).cpu().numpy().tolist(),

torch.func.hessian(lambda x: fn(x, a, b, c))(x).cpu().numpy().tolist(),

sep=', ')

# Evaluate derivative table representation for a given deviation from the evaluation point

dx = torch.tensor([+1.0, -1.0], dtype=dtype, device=device)

print(evaluate(values, [dx]).cpu().numpy())

print(fn(x + dx, a, b, c).cpu().numpy())

# Evaluate can be mapped over a set of deviation values

print(torch.func.vmap(lambda x: evaluate(values, [x]))(torch.stack(5*[dx])).cpu().numpy().tolist())

# Derivative can be mapped over a set of evaluation points

# Note, the inputt function is expeted to return a tensor

print(torch.func.vmap(lambda x: derivative(1, fn, x, a, b, c, intermediate=False))(torch.stack(5*[x])).cpu().numpy().tolist())

[[2.0, 0.0], [0.0, 2.0]]

3.0, [-2.0, 2.0], [[2.0, 0.0], [0.0, 2.0]]

3.0, [-2.0, 2.0], [[2.0, 0.0], [0.0, 2.0]]

1.0

1.0

[1.0, 1.0, 1.0, 1.0, 1.0]

[[-2.0, 2.0], [-2.0, 2.0], [-2.0, 2.0], [-2.0, 2.0], [-2.0, 2.0]]

[7]:

# Derivative

# Input: vector

# Output: vector

# Set test function

# Note, the first function argument is a vector tensor

# Input function can have other additional arguments

# Other arguments (if any) are not used in computation of derivatives

def fn(x):

x1, x2 = x

X1 = 1.0*x1 + 2.0*x2

X2 = 3.0*x1 + 4.0*x2

X3 = 5.0*x1 + 6.0*x2

return torch.stack([X1, X2, X3])

# Set derivative order

n = 1

# Set evaluation point

x = torch.tensor([0.0, 0.0], dtype=dtype, device=device)

# Compute derivatives

values = derivative(n, fn, x)

print(*[value.cpu().numpy().tolist() for value in values], sep=', ')

print()

# Evaluate derivative table representation for a given deviation from the evaluation point

dx = torch.tensor([+1, -1], dtype=dtype, device=device)

print(evaluate(values, [dx]).cpu().numpy().tolist())

print(fn(x + dx).cpu().numpy().tolist())

[0.0, 0.0, 0.0], [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

[-1.0, -1.0, -1.0]

[-1.0, -1.0, -1.0]

[8]:

# Derivative

# Input: tensor

# Output: tensor

# Set test function

def fn(x):

return 1 + x + x**2 + x**3

# Set derivative order

n = 3

# Set evaluation point

x = torch.zeros((1, 2, 3), dtype=dtype, device=device)

# Compute derivatives

# Note, output is a list of tensors

values = derivative(n, fn, x)

print(*[list(value.shape) for value in values], sep='\n')

# Evaluate derivative table representation for a given deviation from the evaluation point

# Note, evaluate function works with scalar or vector tensor input

# One should compute derivatives of a wrapped function and reshape the result of evaluate

# Set wrapped function

def gn(x, shape):

return fn(x.reshape(shape)).flatten()

print(fn(x).cpu().numpy().tolist())

print(gn(x.flatten(), x.shape).reshape(x.shape).cpu().numpy().tolist())

# Compute derivatives

values = derivative(n, gn, x.flatten(), x.shape)

# Set deviation value

dx = torch.ones_like(x)

# Evaluate

print(evaluate(values, [dx.flatten()]).reshape(x.shape).cpu().numpy().tolist())

print(gn((x + dx).flatten(), x.shape).reshape(x.shape).cpu().numpy().tolist())

print(fn(x + dx).cpu().numpy().tolist())

[1, 2, 3]

[1, 2, 3, 1, 2, 3]

[1, 2, 3, 1, 2, 3, 1, 2, 3]

[1, 2, 3, 1, 2, 3, 1, 2, 3, 1, 2, 3]

[[[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]]

[[[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]]

[[[4.0, 4.0, 4.0], [4.0, 4.0, 4.0]]]

[[[4.0, 4.0, 4.0], [4.0, 4.0, 4.0]]]

[[[4.0, 4.0, 4.0], [4.0, 4.0, 4.0]]]

[9]:

# Derivative

# Input: vector

# Output: nested list of tensors

# Set test function

def fn(x):

x1, x2, x3, x4, x5, x6 = x

X1 = 1.0*x1 + 2.0*x2 + 3.0*x3

X2 = 4.0*x4 + 5.0*x5 + 6.0*x6

return [torch.stack([X1]), [torch.stack([X2])]]

# Set derivative order

n = 1

# Set evaluation point

x = torch.tensor([0.0, 0.0, 0.0, 0.0, 0.0, 0.0], dtype=dtype, device=device)

# Compute derivatives

values = derivative(n, fn, x, intermediate=False)

[10]:

# Derivative

# Input: vector, vector, vector

# Output: vector

# Set test function

def fn(x, y, z):

x1, x2 = x

y1, y2 = y

z1, z2 = z

return torch.stack([(x1 + x2)*(y1 + y2)*(z1 + z2)])

# Set derivative orders for x, y and z

nx, ny, nz = 1, 1, 1

# Set evaluation point

# Note, evaluation point is a list of tensors

x = torch.tensor([0.0, 0.0], dtype=dtype, device=device)

y = torch.tensor([0.0, 0.0], dtype=dtype, device=device)

z = torch.tensor([0.0, 0.0], dtype=dtype, device=device)

# Compute n'th derivativ

value = derivative((nx, ny, nz), fn, x, y, z, intermediate=False)

print(value.cpu().numpy().tolist())

# Compute all derivatives upto given order

values = derivative((nx, ny, nz), fn, x, y, z, intermediate=True)

# Evaluate derivative table representation for a given deviation from the evaluation point

dx = torch.tensor([1.0, 1.0], dtype=dtype, device=device)

dy = torch.tensor([1.0, 1.0], dtype=dtype, device=device)

dz = torch.tensor([1.0, 1.0], dtype=dtype, device=device)

print(evaluate(values, [dx, dy, dz]).cpu().numpy().tolist())

print(fn(x + dx, y + dy, z + dz).cpu().numpy().tolist())

# Note, if the input function has vector arguments and returns a tensor, it can be repsented with series

for key, value in series(tuple(map(len, (x, y, z))), (nx, ny, nz), values).items():

print(f'{key}: {value.cpu().numpy().tolist()}')

[[[[1.0, 1.0], [1.0, 1.0]], [[1.0, 1.0], [1.0, 1.0]]]]

[8.0]

[8.0]

(0, 0, 0, 0, 0, 0): [0.0]

(0, 0, 0, 0, 1, 0): [0.0]

(0, 0, 0, 0, 0, 1): [0.0]

(0, 0, 1, 0, 0, 0): [0.0]

(0, 0, 0, 1, 0, 0): [0.0]

(0, 0, 1, 0, 1, 0): [0.0]

(0, 0, 1, 0, 0, 1): [0.0]

(0, 0, 0, 1, 1, 0): [0.0]

(0, 0, 0, 1, 0, 1): [0.0]

(1, 0, 0, 0, 0, 0): [0.0]

(0, 1, 0, 0, 0, 0): [0.0]

(1, 0, 0, 0, 1, 0): [0.0]

(1, 0, 0, 0, 0, 1): [0.0]

(0, 1, 0, 0, 1, 0): [0.0]

(0, 1, 0, 0, 0, 1): [0.0]

(1, 0, 1, 0, 0, 0): [0.0]

(1, 0, 0, 1, 0, 0): [0.0]

(0, 1, 1, 0, 0, 0): [0.0]

(0, 1, 0, 1, 0, 0): [0.0]

(1, 0, 1, 0, 1, 0): [1.0]

(1, 0, 1, 0, 0, 1): [1.0]

(1, 0, 0, 1, 1, 0): [1.0]

(1, 0, 0, 1, 0, 1): [1.0]

(0, 1, 1, 0, 1, 0): [1.0]

(0, 1, 1, 0, 0, 1): [1.0]

(0, 1, 0, 1, 1, 0): [1.0]

(0, 1, 0, 1, 0, 1): [1.0]

[11]:

# Redundancy free computation

# Set test function

def fn(x):

x1, x2 = x

return torch.stack([1.0*x1 + 2.0*x2 + 3.0*x1**2 + 4.0*x1*x2 + 5.0*x2**2])

# Set derivative order

n = 2

# Set evaluation point

x = torch.tensor([0.0, 0.0], dtype=dtype, device=device)

# Compute n'th derivative

value = derivative(n, fn, x, intermediate=False)

print(value.cpu().numpy().tolist())

# Since derivatives are computed by nesting of jacobian function, redundant computations appear starting from the second order

# Redundant computations can be avoided if all input arguments are scalar tensors

def gn(x1, x2):

return fn(torch.stack([x1, x2]))

print(derivative((2, 0), gn, *x, intermediate=False).cpu().numpy().tolist())

print(derivative((1, 1), gn, *x, intermediate=False).cpu().numpy().tolist())

print(derivative((0, 2), gn, *x, intermediate=False).cpu().numpy().tolist())

[[[6.0, 4.0], [4.0, 10.0]]]

[6.0]

[4.0]

[10.0]

Example-02: Derivative table representation

[1]:

# Input function f: R^n x R^m x ... -> R^n is referred as a mapping

# The first function argument is state, other arguments (used in computation of derivatives) and knobs

# State and all knobs are vector-like tensors

# Note, functions of this form can be used to model tranformations throught accelerator magnets

# In this case, derivatives can be used to generate a (parametric) model of the input function

# Function model can be represented as a derivative table or coefficients of monomials (series representation)

# In this example, table representation is used to model transformation throught a sextupole accelerator magnet

# Table is computed with respect to state variables (phase space variables) and knobs (magnet strength and length)

[2]:

# Import

import numpy

import torch

from ndmap.derivative import derivative

from ndmap.signature import signature

from ndmap.signature import get

from ndmap.index import index

from ndmap.index import reduce

from ndmap.index import build

from ndmap.series import series

from ndmap.evaluate import evaluate

from ndmap.evaluate import compare

torch.set_printoptions(precision=12, sci_mode=True)

print(torch.cuda.is_available())

import warnings

warnings.filterwarnings("ignore")

True

[3]:

# Set data type and device

dtype = torch.float64

device = torch.device('cpu')

[4]:

# Mapping (sextupole accelerator magnet transformatijet)

# Given initial state, magnet strength and length, state is propagated using explicit symplectic integration

# Number of integration steps is set by count parameter, integration step length is length/count

def mapping(x, k, l, count=10):

(qx, px, qy, py), (k, ), (l, ) = x, k, l/(2.0*count)

for _ in range(count):

qx, qy = qx + l*px, qy + l*py

px, py = px - 2.0*l*k*(qx**2 - qy**2), py + 2.0*l*k*qx*qy

qx, qy = qx + l*px, qy + l*py

return torch.stack([qx, px, qy, py])

[5]:

# Table representation (state)

# Set evaluation point & parameters

x = torch.tensor([0.0, 0.0, 0.0, 0.0], dtype=dtype, device=device)

k = torch.tensor([10.0], dtype=dtype, device=device)

l = torch.tensor([0.1], dtype=dtype, device=device)

# Compute derivatives (table representation)

# Since derivatives are computed only with respect to the state, output table is a list of tensors

t = derivative(6, mapping, x, k, l)

print(*[element.shape for element in t], sep='\n')

torch.Size([4])

torch.Size([4, 4])

torch.Size([4, 4, 4])

torch.Size([4, 4, 4, 4])

torch.Size([4, 4, 4, 4, 4])

torch.Size([4, 4, 4, 4, 4, 4])

torch.Size([4, 4, 4, 4, 4, 4, 4])

[6]:

# Compare table and exact mapping near the evaluation point (change order to observe convergence)

# Note, table transformation is not symplectic

dx = torch.tensor([0.0, 0.001, 0.0001, 0.0], dtype=dtype, device=device)

print(evaluate(t, [dx]).cpu().tolist())

print(mapping(x + dx, k, l).cpu().tolist())

[0.00010000041624970626, 0.0010000066749862018, 0.00010000016750044096, 5.000018166514047e-09]

[0.0001000004162497062, 0.0010000066749862018, 0.00010000016750044096, 5.000018166514046e-09]

[7]:

# Each bottom element (tensor) in the (flattend) derivative table is assosiated with a signature

# Signature is a tuple of derivative orders

print(signature(t))

[(0,), (1,), (2,), (3,), (4,), (5,), (6,)]

[8]:

# For a given signature, corresponding element can be extracted or changed with get/set functions

print(get(t, (1, )).cpu().numpy())

[[1. 0.1 0. 0. ]

[0. 1. 0. 0. ]

[0. 0. 1. 0.1]

[0. 0. 0. 1. ]]

[9]:

# Each bottom element is related to monomials

# For given order, monomial indices with repetitions can be computed

# These repetitions account for evaluation of the same partial derivatives with diffenent orders, e.g. df/dxdy vs df/dydx

print(index(4, 2))

[[2 0 0 0]

[1 1 0 0]

[1 0 1 0]

[1 0 0 1]

[1 1 0 0]

[0 2 0 0]

[0 1 1 0]

[0 1 0 1]

[1 0 1 0]

[0 1 1 0]

[0 0 2 0]

[0 0 1 1]

[1 0 0 1]

[0 1 0 1]

[0 0 1 1]

[0 0 0 2]]

[10]:

# Explicit evaluation

print(evaluate(t, [dx]).cpu().numpy())

print((t[0] + t[1] @ dx + 1/2 * t[2] @ dx @ dx + 1/2 * 1/3 * t[3] @ dx @ dx @ dx + 1/2 * 1/3 * 1/4 * t[4] @ dx @ dx @ dx @ dx + 1/2 * 1/3 * 1/4 * 1/5 * t[5] @ dx @ dx @ dx @ dx @ dx + 1/2 * 1/3 * 1/4 * 1/5 * 1/6 * t[6] @ dx @ dx @ dx @ dx @ dx @ dx).cpu().numpy())

print((t[0] + (t[1] + 1/2 * (t[2] + 1/3 * (t[3] + 1/4 * (t[4] + 1/5 * (t[5] + 1/6 * t[6] @ dx) @ dx) @ dx) @ dx) @ dx) @ dx).cpu().numpy())

[1.00000416e-04 1.00000667e-03 1.00000168e-04 5.00001817e-09]

[1.00000416e-04 1.00000667e-03 1.00000168e-04 5.00001817e-09]

[1.00000416e-04 1.00000667e-03 1.00000168e-04 5.00001817e-09]

[11]:

# Series representation can be generated from a given table

# This representation stores monomial powers and corresponding coefficients

s = series((4, ), (6, ), t)

print(torch.stack([s[(1, 0, 0, 0)], s[(0, 1, 0, 0)], s[(0, 0, 1, 0)], s[(0, 0, 0, 1)]]).cpu().numpy())

[[1. 0. 0. 0. ]

[0.1 1. 0. 0. ]

[0. 0. 1. 0. ]

[0. 0. 0.1 1. ]]

[12]:

# Evaluate series

print(evaluate(t, [dx]).cpu().numpy())

print(evaluate(s, [dx]).cpu().numpy())

[1.00000416e-04 1.00000667e-03 1.00000168e-04 5.00001817e-09]

[1.00000416e-04 1.00000667e-03 1.00000168e-04 5.00001817e-09]

[13]:

# Table representation (state & knobs)

# Set evaluation point

x = torch.tensor([0.0, 0.0, 0.0, 0.0], dtype=dtype, device=device)

k = torch.tensor([10.0], dtype=dtype, device=device)

l = torch.tensor([0.1], dtype=dtype, device=device)

# Compute derivatives (table representation)

# Since derivatives are computed with respect to state and knobs, output table is a nested list of tensors

t = derivative((6, 1, 1), mapping, x, k, l)

[14]:

# In this case, bottom table element signature is a tuple with several integers

print(get(t, (1, 0, 0)).cpu().numpy())

[[1. 0.1 0. 0. ]

[0. 1. 0. 0. ]

[0. 0. 1. 0.1]

[0. 0. 0. 1. ]]

[15]:

# Compare table and exact mapping near evaluation point (change order to observe convergence)

# Note, table transofrmation is not symplectic

dx = torch.tensor([0.0, 0.001, 0.0001, 0.0], dtype=dtype, device=device)

dk = torch.tensor([0.1], dtype=dtype, device=device)

dl = torch.tensor([0.001], dtype=dtype, device=device)

print(evaluate(t, [dx, 0.0*dk, 0.0*dl]).cpu().tolist())

print(evaluate(t, [dx, 1.0*dk, 1.0*dl]).cpu().tolist())

print(mapping(x + dx, k + dk, l + dl).cpu().tolist())

[0.00010000041624970626, 0.0010000066749862018, 0.00010000016750044096, 5.000018166514047e-09]

[0.0001010004271286862, 0.001000006741987918, 0.00010000017425071835, 5.1510191197368185e-09]

[0.00010100042712809422, 0.0010000067409770773, 0.00010000017430164039, 5.151524128394736e-09]

[16]:

# Each bottom element (tensor) in the (flattend) derivative table is assosiated with a signature

# Signature is a tuple of derivative orders

print(*[index for index in signature(t)], sep='\n')

(0, 0, 0)

(0, 0, 1)

(0, 1, 0)

(0, 1, 1)

(1, 0, 0)

(1, 0, 1)

(1, 1, 0)

(1, 1, 1)

(2, 0, 0)

(2, 0, 1)

(2, 1, 0)

(2, 1, 1)

(3, 0, 0)

(3, 0, 1)

(3, 1, 0)

(3, 1, 1)

(4, 0, 0)

(4, 0, 1)

(4, 1, 0)

(4, 1, 1)

(5, 0, 0)

(5, 0, 1)

(5, 1, 0)

(5, 1, 1)

(6, 0, 0)

(6, 0, 1)

(6, 1, 0)

(6, 1, 1)

[17]:

# Compute series

s = series((4, 1, 1), (6, 1, 1), t)

# Keys are generalized monomials

print(s[(1, 1, 1, 1, 1, 1)].cpu().numpy())

print()

# Evaluate series

print(evaluate(t, [dx, dk, dl]).cpu().numpy())

print(evaluate(s, [dx, dk, dl]).cpu().numpy())

print()

[1.447578e-06 9.756747e-05 0.000000e+00 0.000000e+00]

[1.01000427e-04 1.00000674e-03 1.00000174e-04 5.15101912e-09]

[1.01000427e-04 1.00000674e-03 1.00000174e-04 5.15101912e-09]

[18]:

# Reduced table representation

sequence, shape, unique = reduce((4, 1, 1), t)

out = derivative((6, 1, 1), lambda x, k, l: x, x, k, l)

build(out, sequence, shape, unique)

compare(t, out)

[18]:

True

Example-03: Derivative table propagation

[1]:

# Given a mapping f(state, *knobs, ...) and a derivative table t, derivatives of f(t, *knobs, ...) are computed

# This can be used to propagate derivative table throught a given mapping (computation of parametric fixed points and other applications)

[2]:

# Import

import numpy

import torch

from ndmap.derivative import derivative

from ndmap.series import series

from ndmap.series import clean

from ndmap.evaluate import evaluate

from ndmap.propagate import identity

from ndmap.propagate import propagate

torch.set_printoptions(precision=12, sci_mode=True)

print(torch.cuda.is_available())

import warnings

warnings.filterwarnings("ignore")

True

[3]:

# Set data type and device

dtype = torch.float64

device = torch.device('cpu')

[4]:

# Define mappings

def drif(x, w, l):

(qx, px, qy, py), (w, ), l = x, w, l

return torch.stack([qx + l*px/(1 + w), px, qy + l*py/(1 + w), py])

def quad(x, w, kq, l, n=100):

(qx, px, qy, py), (w, ), kq, l = x, w, kq, l/(2.0*n)

for _ in range(n):

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

px, py = px - 2.0*l*kq*qx, py + 2.0*l*kq*qy

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

return torch.stack([qx, px, qy, py])

def ring(x, w):

x = quad(x, w, +0.25, 0.5)

x = drif(x, w, 5.0)

x = quad(x, w, -0.20, 0.5)

x = quad(x, w, -0.20, 0.5)

x = drif(x, w, 5.0)

x = quad(x, w, +0.25, 0.5)

return x

[5]:

# Direct

# Set evaluation point

x = torch.tensor([0.0, 0.0, 0.0, 0.0], dtype=dtype, device=device)

w = torch.tensor([0.0], dtype=dtype, device=device)

# Compute derivatives

t = derivative((1, 4), ring, x, w)

# Evaluate for a given deviation

dx = torch.tensor([0.001, 0.0, 0.001, 0.0], dtype=dtype, device=device)

dw = torch.tensor([0.001], dtype=dtype, device=device)

print(ring(x + dx, w + dw).cpu().numpy())

print(evaluate(t, [dx, dw]).cpu().numpy())

[-8.88568650e-05 -5.43957672e-05 4.97569694e-04 -1.40349102e-04]

[-8.88568650e-05 -5.43957672e-05 4.97569694e-04 -1.40349102e-04]

[6]:

# Propagation

# Set evaluation point

x = torch.tensor([0.0, 0.0, 0.0, 0.0], dtype=dtype, device=device)

w = torch.tensor([0.0], dtype=dtype, device=device)

# Set identity table

t = identity((1, 4), [x, w])

# Propagate table

t = propagate((4, 1), (1, 4), t, [w], quad, +0.25, 0.5)

t = propagate((4, 1), (1, 4), t, [w], drif, 5.0)

t = propagate((4, 1), (1, 4), t, [w], quad, -0.20, 0.5)

t = propagate((4, 1), (1, 4), t, [w], quad, -0.20, 0.5)

t = propagate((4, 1), (1, 4), t, [w], drif, 5.0)

t = propagate((4, 1), (1, 4), t, [w], quad, +0.25, 0.5)

# Evaluate for a given deviation

dx = torch.tensor([0.001, 0.0, 0.001, 0.0], dtype=dtype, device=device)

dw = torch.tensor([0.001], dtype=dtype, device=device)

print(ring(x + dx, w + dw).cpu().numpy())

print(evaluate(t, [dx, dw]).cpu().numpy())

[-8.88568650e-05 -5.43957672e-05 4.97569694e-04 -1.40349102e-04]

[-8.88568650e-05 -5.43957672e-05 4.97569694e-04 -1.40349102e-04]

[7]:

# Series representation

s = clean(series((4, 1), (1, 4), t))

for key, value in s.items():

print(f'{key}: {value.cpu().numpy()}')

(1, 0, 0, 0, 0): [-0.09072843 -0.05430498 0. 0. ]

(0, 1, 0, 0, 0): [18.26293553 -0.09072843 0. 0. ]

(0, 0, 1, 0, 0): [ 0. 0. 0.49625858 -0.14063034]

(0, 0, 0, 1, 0): [0. 0. 5.35963583 0.49625858]

(1, 0, 0, 0, 1): [ 1.87420951 -0.09097396 0. 0. ]

(0, 1, 0, 0, 1): [-24.33227046 1.87420951 0. 0. ]

(0, 0, 1, 0, 1): [0. 0. 1.31324305 0.28161167]

(0, 0, 0, 1, 1): [0. 0. 1.46426265 1.31324305]

(1, 0, 0, 0, 2): [-2.65007558 0.18727838 0. 0. ]

(0, 1, 0, 0, 2): [30.20570906 -2.65007558 0. 0. ]

(0, 0, 1, 0, 2): [ 0. 0. -2.12812904 -0.37146989]

(0, 0, 0, 1, 2): [ 0. 0. -8.46895909 -2.12812904]

(1, 0, 0, 0, 3): [ 3.41796043 -0.28459189 0. 0. ]

(0, 1, 0, 0, 3): [-35.88102956 3.41796043 0. 0. ]

(0, 0, 1, 0, 3): [0. 0. 2.94802229 0.46018886]

(0, 0, 0, 1, 3): [ 0. 0. 15.6516443 2.94802229]

(1, 0, 0, 0, 4): [-4.17749922 0.38289808 0. 0. ]

(0, 1, 0, 0, 4): [41.3560843 -4.17749922 0. 0. ]

(0, 0, 1, 0, 4): [ 0. 0. -3.77254434 -0.54775243]

(0, 0, 0, 1, 4): [ 0. 0. -23.00943634 -3.77254434]

[8]:

# Check invariant

# Note, ring has two quadratic invariants (actions), zeros are padded to match state length

# Define invariant

matrix = torch.tensor([[4.282355639365032, 0.0, 0.0, 0.0], [0.0, 0.23351633638449415, 0.0, 0.0], [0.0, 0.0, 2.484643367729646, 0.0], [0.0, 0.0, 0.0, 0.40247224732044934]], dtype=dtype, device=device)

def invariant(x):

qx, px, qy, py = matrix.inverse() @ x

return torch.stack([0.5*(qx**2 + px**2), 0.5*(qy**2 + py**2), *torch.tensor(2*[0.0], dtype=dtype, device=device)])

# Set evaluation point

x = torch.tensor([0.001, 0.0, 0.001, 0.0], dtype=dtype, device=device)

w = torch.tensor([0.0], dtype=dtype, device=device)

# Evaluate invarint for a given state and transformed state

print(invariant(x).cpu().numpy())

print(invariant(ring(x, w)).cpu().numpy())

[2.72649397e-08 8.09919549e-08 0.00000000e+00 0.00000000e+00]

[2.72649397e-08 8.09919549e-08 0.00000000e+00 0.00000000e+00]

[9]:

# Invariant propagation

# Set evaluation point

x = torch.tensor([0.0, 0.0, 0.0, 0.0], dtype=dtype, device=device)

w = torch.tensor([0.0], dtype=dtype, device=device)

# Compute table and series representations of invariant

t = derivative((2, ), invariant, x)

s = series((4, ), (2, ), t)

print(*[f'{key}: {value.cpu().numpy()}' for key, value in clean(s, epsilon=1.0E-14).items()], sep='\n')

print()

# Compute table and series representations of transformed invariant

t = derivative((2, ), lambda x: invariant(ring(x, w)), x)

s = series((4, ), (2, ), t)

print(*[f'{key}: {value.cpu().numpy()}' for key, value in clean(s, epsilon=1.0E-14).items()], sep='\n')

print()

# Propagate invariant

t = derivative((2, ), ring, x, w)

t = propagate((4, ), (2, ), t, [], invariant)

s = series((4, ), (2, ), t)

print(*[f'{key}: {value.cpu().numpy()}' for key, value in clean(s, epsilon=1.0E-14).items()], sep='\n')

print()

(2, 0, 0, 0): [0.02726494 0. 0. 0. ]

(0, 2, 0, 0): [9.16928491 0. 0. 0. ]

(0, 0, 2, 0): [0. 0.08099195 0. 0. ]

(0, 0, 0, 2): [0. 3.08672633 0. 0. ]

(2, 0, 0, 0): [0.02726494 0. 0. 0. ]

(0, 2, 0, 0): [9.16928491 0. 0. 0. ]

(0, 0, 2, 0): [0. 0.08099195 0. 0. ]

(0, 0, 0, 2): [0. 3.08672633 0. 0. ]

(2, 0, 0, 0): [0.02726494 0. 0. 0. ]

(0, 2, 0, 0): [9.16928491 0. 0. 0. ]

(0, 0, 2, 0): [0. 0.08099195 0. 0. ]

(0, 0, 0, 2): [0. 3.08672633 0. 0. ]

Example-04: Jet class

[1]:

# Jet is a convenience class to work with jets (evaluation point & derivative table)

[2]:

# Import

import numpy

import torch

from ndmap.derivative import derivative

from ndmap.evaluate import evaluate

from ndmap.jet import Jet

torch.set_printoptions(precision=12, sci_mode=True)

print(torch.cuda.is_available())

import warnings

warnings.filterwarnings("ignore")

True

[3]:

# Set data type and device

dtype = torch.float64

device = torch.device('cpu')

[4]:

# Define mappings

def drif(x, w, l):

(qx, px, qy, py), (w, ), l = x, w, l

return torch.stack([qx + l*px/(1 + w), px, qy + l*py/(1 + w), py])

def quad(x, w, kq, l, n=100):

(qx, px, qy, py), (w, ), kq, l = x, w, kq, l/(2.0*n)

for _ in range(n):

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

px, py = px - 2.0*l*kq*qx, py + 2.0*l*kq*qy

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

return torch.stack([qx, px, qy, py])

# Set evaluation point

x = torch.tensor([0.0, 0.0, 0.0, 0.0], dtype=dtype, device=device)

w = torch.tensor([0.0], dtype=dtype, device=device)

# Compute table representation

t = derivative((1, 4), lambda x, w: quad(drif(x, w, 1.0), w, 1.0, 1.0, 1), x, w)

[5]:

# Set jet

j = Jet((4, 1), (1, 4), point=[x, w], dtype=dtype, device=device)

j = j.propagate(drif, 1.0)

j = j.propagate(quad, 1.0, 1.0, 1)

[6]:

# Evaluate at given deviation

dx = torch.tensor([0.001, 0.0, 0.001, 0.0], dtype=dtype, device=device)

dw = torch.tensor([0.001], dtype=dtype, device=device)

print(evaluate(t, [dx, dw]).cpu().numpy())

print(j([dx, dw]).cpu().numpy())

[ 0.0005005 -0.001 0.0014995 0.001 ]

[ 0.0005005 -0.001 0.0014995 0.001 ]

[7]:

# Composition

j1 = Jet.from_mapping((4, 1), (1, 4), [x, w], drif, 1.0, dtype=dtype, device=device)

j2 = Jet.from_mapping((4, 1), (1, 4), [x, w], quad, 1.0, 1.0, 1, dtype=dtype, device=device)

print((j1 @ j2)([dx, dw]).cpu().numpy())

[ 0.0005005 -0.001 0.0014995 0.001 ]

Example-05: Nonlinear mapping approximation

[1]:

# Composition of several nonlinear mappings can be approximated by its table representation

[2]:

# Import

import numpy

import torch

from ndmap.derivative import derivative

from ndmap.series import series

from ndmap.series import clean

from ndmap.evaluate import evaluate

torch.set_printoptions(precision=12, sci_mode=True)

print(torch.cuda.is_available())

from matplotlib import pyplot as plt

import warnings

warnings.filterwarnings("ignore")

True

[3]:

# Set data type and device

dtype = torch.float64

device = torch.device('cpu')

[4]:

# Set test mapping

# Rotation with two sextupoles separated by negative identity linear transformation

# Note, result is expected to have zero degree two coefficients due to negative identity linear transformation between sextupoles

def spin(x, mux, muy):

(qx, px, qy, py), mux, muy = x, mux, muy

return torch.stack([qx*mux.cos() + px*mux.sin(), px*mux.cos() - qx*mux.sin(), qy*muy.cos() + py*muy.sin(), py*muy.cos() - qy*muy.sin()])

def drif(x, l):

(qx, px, qy, py), l = x, l

return torch.stack([qx + l*px, px, qy + l*py, py])

def sext(x, ks, l, n=1):

(qx, px, qy, py), ks, l = x, ks, l/(2.0*n)

for _ in range(n):

qx, qy = qx + l*px, qy + l*py

px, py = px - 1.0*l*ks*(qx**2 - qy**2), py + 2.0*l*ks*qx*qy

qx, qy = qx + l*px, qy + l*py

return torch.stack([qx, px, qy, py])

def ring(x):

mux, muy = 2.0*numpy.pi*torch.tensor([1/3 + 0.01, 1/4 + 0.01], dtype=dtype, device=device)

x = spin(x, mux, muy)

x = drif(x, -0.05)

x = sext(x, 10.0, 0.1, 100)

x = drif(x, -0.05)

mux, muy = 2.0*numpy.pi*torch.tensor([0.50, 0.50], dtype=dtype, device=device)

x = spin(x, mux, muy)

x = drif(x, -0.05)

x = sext(x, 10.0, 0.1, 100)

x = drif(x, -0.05)

return x

[5]:

# Set evaluation point

x = torch.tensor([0.0, 0.0, 0.0, 0.0], dtype=dtype, device=device)

# Compute derivative table

n = 4

t = derivative(n, ring, x)

# Compute and print series

s = clean(series((4, ), (n, ), t), epsilon=1.0E-12)

print(*[f'{key}: {value.cpu().numpy()}' for key, value in clean(s, epsilon=1.0E-14).items()], sep='\n')

(1, 0, 0, 0): [0.55339155 0.83292124 0. 0. ]

(0, 1, 0, 0): [-0.83292124 0.55339155 0. 0. ]

(0, 0, 1, 0): [0. 0. 0.06279052 0.99802673]

(0, 0, 0, 1): [ 0. 0. -0.99802673 0.06279052]

(3, 0, 0, 0): [-7.53257307e-09 2.82424677e-03 0.00000000e+00 0.00000000e+00]

(2, 1, 0, 0): [-1.96250238e-08 -1.27525063e-02 0.00000000e+00 0.00000000e+00]

(2, 0, 1, 0): [ 0.00000000e+00 -0.00000000e+00 9.21186111e-06 3.34331441e-04]

(2, 0, 0, 1): [ 0.00000000e+00 -0.00000000e+00 1.59704766e-05 -5.06941449e-03]

(1, 2, 0, 0): [-1.11004920e-08 1.91940679e-02 0.00000000e+00 0.00000000e+00]

(1, 1, 1, 0): [ 0.00000000e+00 -0.00000000e+00 -2.98671134e-05 -9.79459005e-04]

(1, 1, 0, 1): [ 0.00000000e+00 -0.00000000e+00 -1.48185697e-05 1.53623878e-02]

(1, 0, 2, 0): [-1.05857397e-06 1.97282603e-05 0.00000000e+00 0.00000000e+00]

(1, 0, 1, 1): [ 1.48154798e-05 -1.18570682e-03 0.00000000e+00 0.00000000e+00]

(1, 0, 0, 2): [2.88067554e-05 9.18409783e-03 0.00000000e+00 0.00000000e+00]

(0, 3, 0, 0): [-9.21338044e-10 -9.62979589e-03 0.00000000e+00 0.00000000e+00]

(0, 2, 1, 0): [ 0.00000000e+00 -0.00000000e+00 2.40366928e-05 7.09979811e-04]

(0, 2, 0, 1): [ 0.00000000e+00 -0.00000000e+00 -1.38786549e-05 -1.15294375e-02]

(0, 1, 2, 0): [ 1.80396305e-06 -2.59196622e-05 0.00000000e+00 0.00000000e+00]

(0, 1, 1, 1): [-2.98509155e-05 1.72488752e-03 0.00000000e+00 0.00000000e+00]

(0, 1, 0, 2): [ 1.66318846e-05 -1.38269522e-02 0.00000000e+00 0.00000000e+00]

(0, 0, 3, 0): [ 0.00000000e+00 -0.00000000e+00 -1.47704279e-08 4.12534350e-06]

(0, 0, 2, 1): [ 0.00000000e+00 -0.00000000e+00 -3.31103168e-09 -1.96719688e-04]

(0, 0, 1, 2): [ 0.00000000e+00 -0.00000000e+00 3.93325786e-09 3.12683573e-03]

(0, 0, 0, 3): [ 0.00000000e+00 -0.00000000e+00 2.56887204e-10 -1.65665408e-02]

(4, 0, 0, 0): [ 6.48869023e-07 -3.91844462e-06 0.00000000e+00 0.00000000e+00]

(3, 1, 0, 0): [-3.91685700e-06 1.51001133e-05 0.00000000e+00 0.00000000e+00]

(3, 0, 1, 0): [ 0.00000000e+00 -0.00000000e+00 -4.36165465e-07 5.76316391e-06]

(3, 0, 0, 1): [ 0.00000000e+00 -0.00000000e+00 6.56410859e-06 1.31668166e-05]

(2, 2, 0, 0): [ 8.85501662e-06 -1.48880894e-05 0.00000000e+00 0.00000000e+00]

(2, 1, 1, 0): [ 0.00000000e+00 -0.00000000e+00 1.92232731e-06 -2.78183189e-05]

(2, 1, 0, 1): [ 0.00000000e+00 -0.00000000e+00 -2.96894787e-05 -3.16635607e-05]

(2, 0, 2, 0): [1.81838643e-08 2.18816315e-06 0.00000000e+00 0.00000000e+00]

(2, 0, 1, 1): [-9.93314202e-07 -3.25277215e-05 0.00000000e+00 0.00000000e+00]

(2, 0, 0, 2): [ 7.67316422e-06 -2.51871510e-05 0.00000000e+00 0.00000000e+00]

(1, 3, 0, 0): [-8.88618620e-06 -4.33921249e-06 0.00000000e+00 0.00000000e+00]

(1, 2, 1, 0): [ 0.00000000e+00 -0.00000000e+00 -2.81910856e-06 4.44963121e-05]

(1, 2, 0, 1): [ 0.00000000e+00 -0.00000000e+00 4.47107608e-05 6.22767407e-06]

(1, 1, 2, 0): [-5.02653030e-08 -6.69892371e-06 0.00000000e+00 0.00000000e+00]

(1, 1, 1, 1): [2.90625131e-06 1.04277202e-04 0.00000000e+00 0.00000000e+00]

(1, 1, 0, 2): [-2.28808176e-05 2.60346923e-05 0.00000000e+00 0.00000000e+00]

(1, 0, 3, 0): [ 0.00000000e+00 -0.00000000e+00 2.09236592e-09 3.51323891e-08]

(1, 0, 2, 1): [ 0.00000000e+00 -0.00000000e+00 -6.41657941e-09 -1.78350571e-06]

(1, 0, 1, 2): [ 0.00000000e+00 -0.00000000e+00 -6.10954936e-07 1.86435774e-05]

(1, 0, 0, 3): [ 0.00000000e+00 -0.00000000e+00 3.05503037e-06 -5.00150901e-05]

(0, 4, 0, 0): [3.33982092e-06 8.88114110e-06 0.00000000e+00 0.00000000e+00]

(0, 3, 1, 0): [ 0.00000000e+00 -0.00000000e+00 1.37553970e-06 -2.36217768e-05]

(0, 3, 0, 1): [ 0.00000000e+00 -0.00000000e+00 -2.24184174e-05 1.77513110e-05]

(0, 2, 2, 0): [3.54703897e-08 5.11986525e-06 0.00000000e+00 0.00000000e+00]

(0, 2, 1, 1): [-2.15414781e-06 -8.31702815e-05 0.00000000e+00 0.00000000e+00]

(0, 2, 0, 2): [1.72952174e-05 1.78791226e-05 0.00000000e+00 0.00000000e+00]

(0, 1, 3, 0): [ 0.00000000e+00 -0.00000000e+00 -3.32576455e-09 -2.16775859e-08]

(0, 1, 2, 1): [ 0.00000000e+00 -0.00000000e+00 1.73891518e-08 1.32003603e-06]

(0, 1, 1, 2): [ 0.00000000e+00 -0.00000000e+00 8.58583303e-07 -7.35197022e-06]

(0, 1, 0, 3): [ 0.00000000e+00 -0.00000000e+00 -4.60205741e-06 -3.44817289e-05]

(0, 0, 4, 0): [-2.95410616e-10 -3.91436795e-10 0.00000000e+00 0.00000000e+00]

(0, 0, 3, 1): [ 1.72261161e-08 -3.76703368e-08 0.00000000e+00 0.00000000e+00]

(0, 0, 2, 2): [-3.76632244e-07 1.04173914e-06 0.00000000e+00 0.00000000e+00]

(0, 0, 1, 3): [ 3.81179356e-06 -5.44741177e-06 0.00000000e+00 0.00000000e+00]

(0, 0, 0, 4): [-1.51552013e-05 -3.46854944e-07 0.00000000e+00 0.00000000e+00]

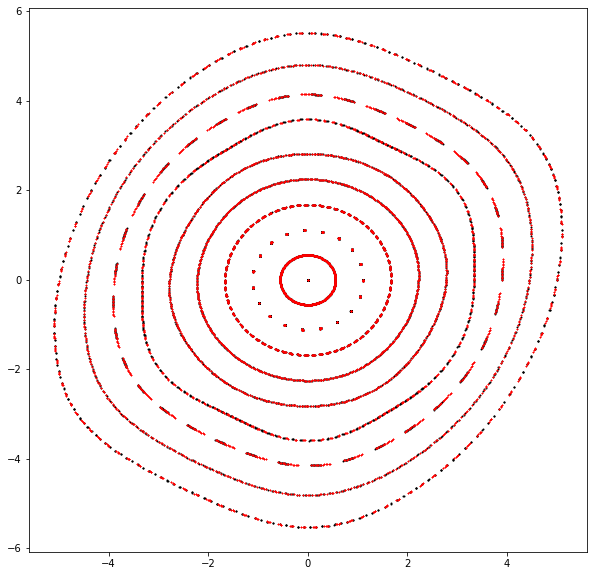

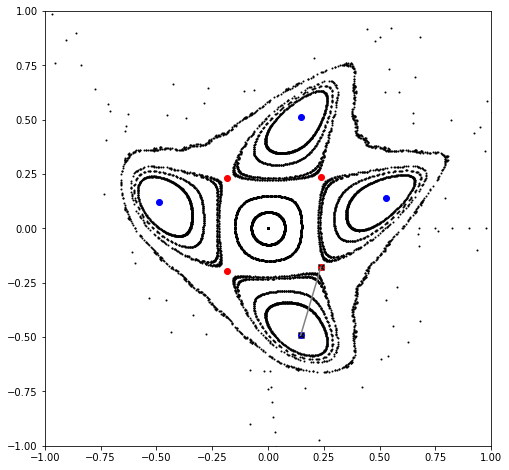

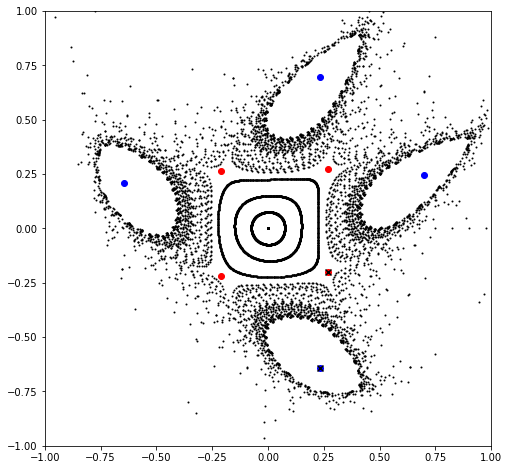

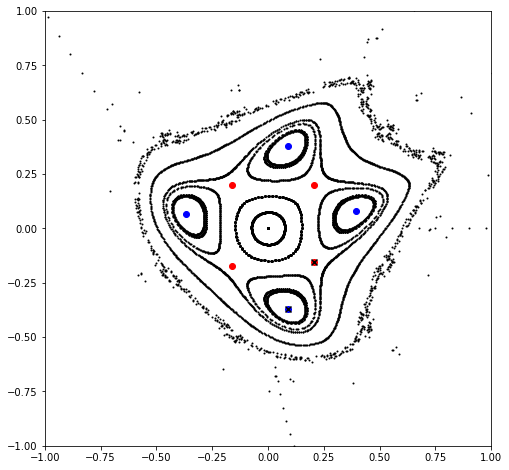

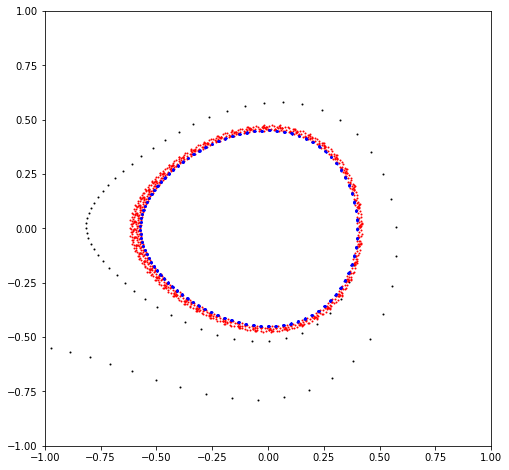

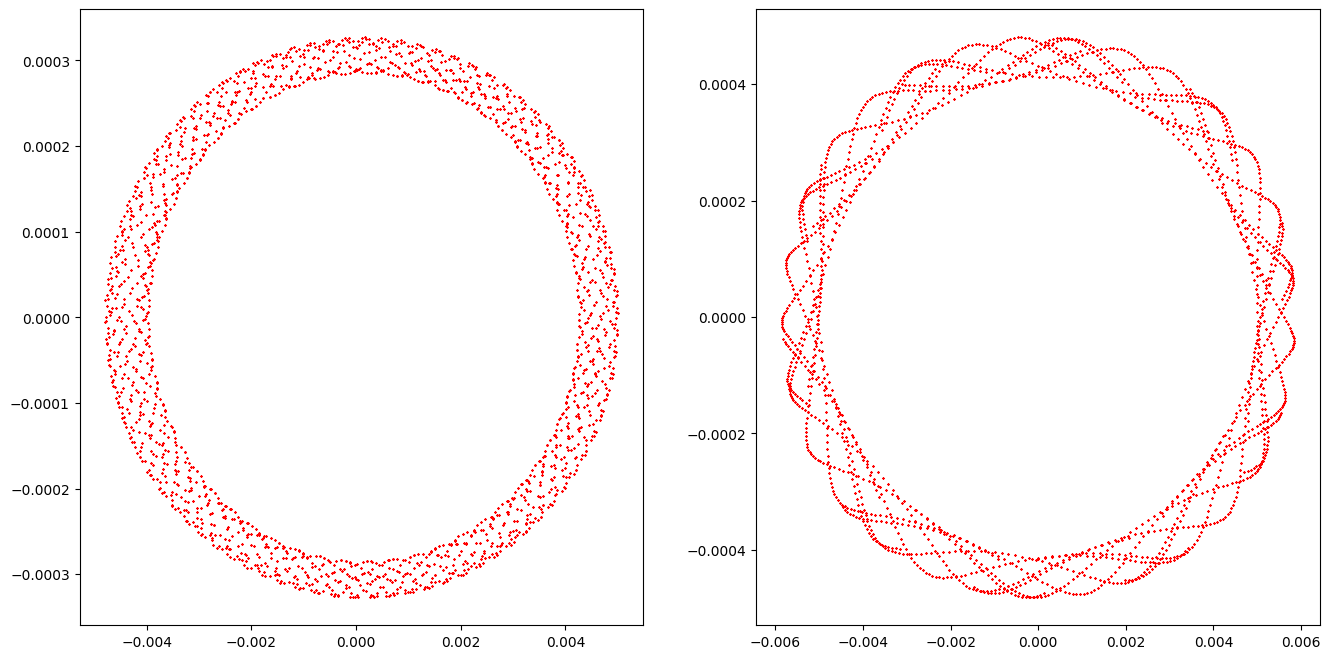

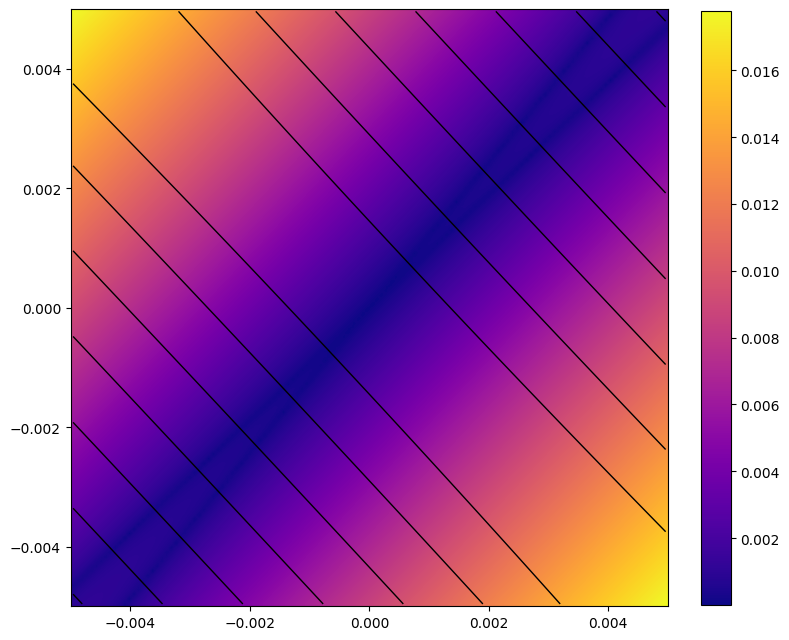

[6]:

# Compare phase space trajectories

# Note, change order to observe convergence

plt.figure(figsize=(10, 10))

# Direct tracking

x = torch.linspace(0.0, 5.0, 10, dtype=dtype, device=device)

x = torch.stack([x, *3*[torch.zeros_like(x)]]).T

count = 512

table = []

for _ in range(count):

table.append(x)

x = torch.func.vmap(lambda x: ring(x))(x)

table = torch.stack(table).swapaxes(0, -1)

qx, px, *_ = table

for q, p in zip(qx.cpu().numpy(), px.cpu().numpy()):

plt.scatter(q, p, color='black', marker='o', s=1)

# Table tracking

# Note, table representation is not symplectic

x = torch.linspace(0.0, 5.0, 10, dtype=dtype, device=device)

x = torch.stack([x, *3*[torch.zeros_like(x)]]).T

count = 512

table = []

for _ in range(count):

table.append(x)

x = torch.func.vmap(lambda x: evaluate(t, [x]))(x)

table = torch.stack(table).swapaxes(0, -1)

qx, px, *_ = table

for q, p in zip(qx.cpu().numpy(), px.cpu().numpy()):

plt.scatter(q, p, color='red', marker='x', s=1)

plt.show()

Example-06: Fixed point

[1]:

# In this example fixed points are computed for a simple symplectic nonlinear transformation

# Fixed point are computed with Newton root search

[2]:

# Import

import numpy

import torch

from ndmap.pfp import fixed_point

from ndmap.pfp import clean_point

from ndmap.pfp import chain_point

from ndmap.pfp import matrix

torch.set_printoptions(precision=12, sci_mode=True)

print(torch.cuda.is_available())

from matplotlib import pyplot as plt

import warnings

warnings.filterwarnings("ignore")

True

[3]:

# Set data type and device

dtype = torch.float64

device = torch.device('cpu')

[4]:

# Set forward & inverse mappings

mu = 2.0*numpy.pi*torch.tensor(1/3 - 0.01, dtype=dtype)

kq, ks, ko = torch.tensor([0.0, 0.25, -0.25], dtype=dtype)

def forward(x):

q, p = x

q, p = q*mu.cos() + p*mu.sin(), p*mu.cos() - q*mu.sin()

q, p = q, p + (kq*q + ks*q**2 + ko*q**3)

return torch.stack([q, p])

def inverse(x):

q, p = x

q, p = q, p - (kq*q + ks*q**2 + ko*q**3)

q, p = q*mu.cos() - p*mu.sin(), p*mu.cos() + q*mu.sin()

return torch.stack([q, p])

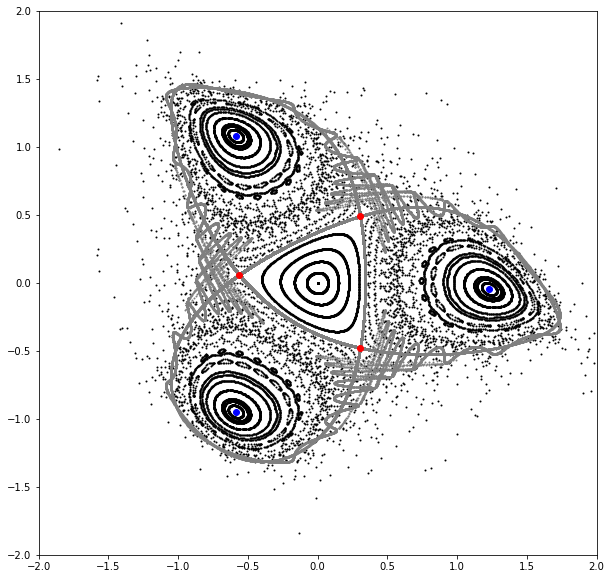

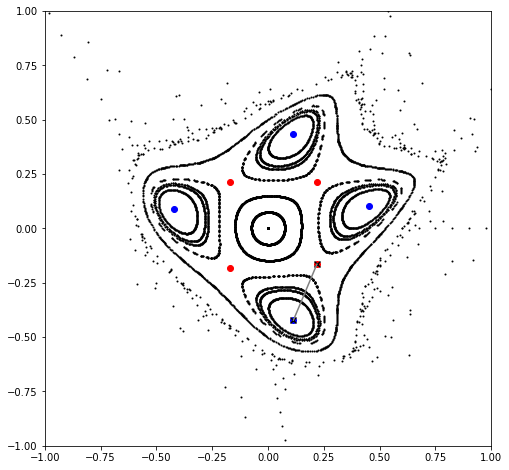

[5]:

# Compute period three fixed points

# Set fixed point period

period = 3

# Set tolerance epsilon

epsilon = 1.0E-12

# Set random initial points

points = 4.0*torch.rand((128, 2), dtype=dtype, device=device) - 2.0

# Perform 512 root search iterations for each initial point

points = torch.func.vmap(lambda point: fixed_point(512, forward, point, power=period))(points)

# Clean points (remove nans, duplicates, points from the same chain)

points = clean_point(period, forward, points, epsilon=epsilon)

# Generate fixed point chains

chains = torch.func.vmap(lambda point: chain_point(period, forward, point))(points)

# Classify fixed point chains (elliptic vs hyperbolic)

# Generate initials for hyperbolic fixed points using corresponding eigenvectors

kinds = []

for chain in chains:

point, *_ = chain

values, vectors = torch.linalg.eig(matrix(period, forward, point))

kind = all(values.log().real < epsilon)

kinds.append(kind)

if not kind:

lines = [point + vector*torch.linspace(-epsilon, +epsilon, 1024, dtype=dtype).reshape(-1, 1) for vector in vectors.real.T]

lines = torch.stack(lines)

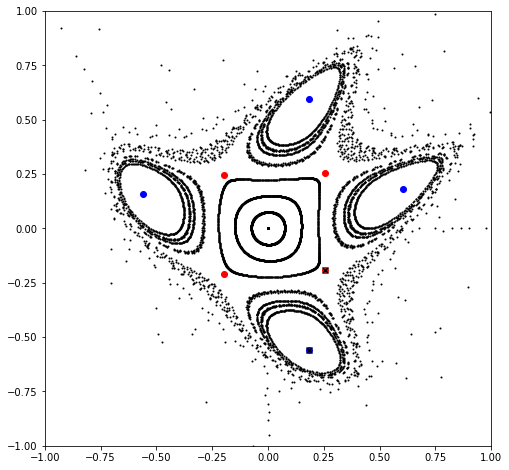

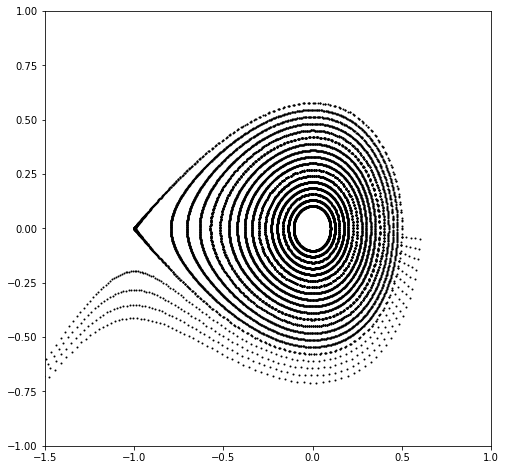

# Plot phase space

x = torch.linspace(0.0, 1.5, 21, dtype=dtype, device=device)

x = torch.stack([x, torch.zeros_like(x)]).T

count = 1024

table = []

for _ in range(count):

table.append(x)

x = torch.func.vmap(lambda x: forward(x))(x)

table = torch.stack(table).swapaxes(0, -1)

qs, ps = table

plt.figure(figsize=(10, 10))

plt.xlim(-2.0, 2.0)

plt.ylim(-2.0, 2.0)

for q, p in zip(qs.cpu().numpy(), ps.cpu().numpy()):

plt.scatter(q, p, color='black', marker='o', s=1)

# Plot (approximated) stable and unstable manifolds of hyperbolic fixed points

count = 310

for line in lines:

x = torch.clone(line)

table = []

for _ in range(count):

table.append(x)

x = torch.func.vmap(lambda x: forward(x))(x)

table = torch.stack(table).swapaxes(0, -1)

qs, ps = table

for q, p in zip(qs.cpu().numpy(), ps.cpu().numpy()):

plt.scatter(q, p, color='gray', marker='o', s=1)

x = torch.clone(line)

table = []

for _ in range(count):

table.append(x)

x = torch.func.vmap(lambda x: inverse(x))(x)

table = torch.stack(table).swapaxes(0, -1)

qs, ps = table

for q, p in zip(qs.cpu().numpy(), ps.cpu().numpy()):

plt.scatter(q, p, color='gray', marker='o', s=1)

# Plot chains

for chain, kind in zip(chains, kinds):

plt.scatter(*chain.T, color = {True:'blue', False:'red'}[kind], marker='o')

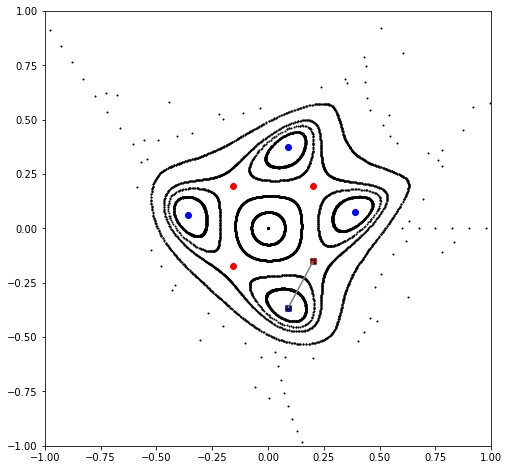

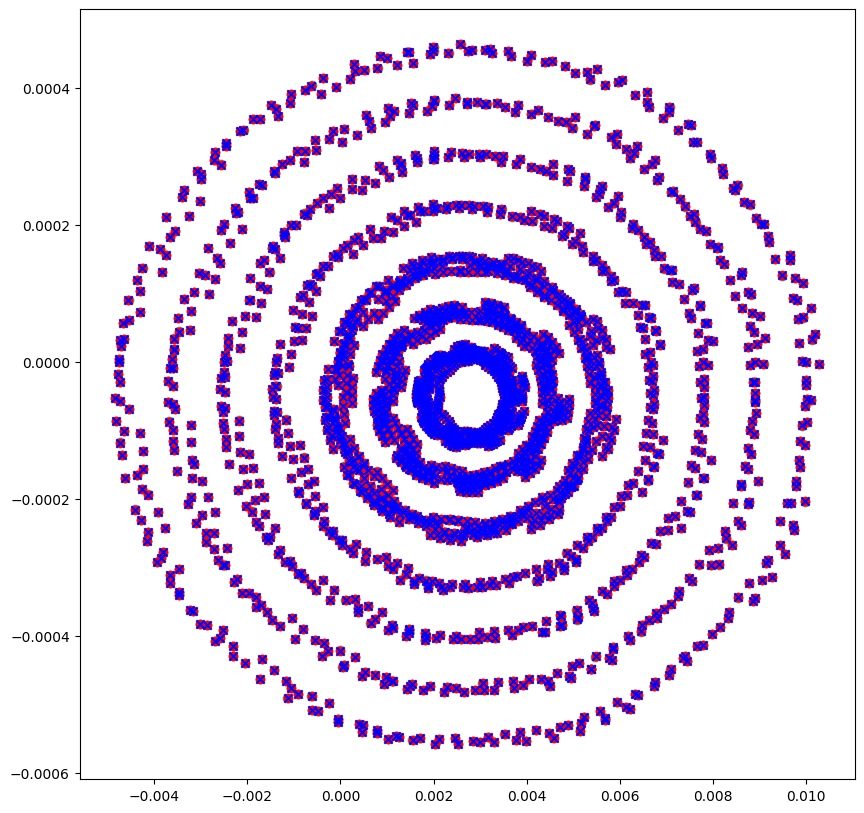

[6]:

# Set mapping around elliptic fixed point

point, *_ = chains[kinds].squeeze()

def mapping(x):

x = x + point

for _ in range(period):

x = forward(x)

x = x - point

return x

# Test mapping

x = torch.zeros_like(point)

print(x)

print(mapping(x))

# Plot phase space

x = torch.linspace(0.0, 1.5, 21, dtype=dtype, device=device)

x = torch.stack([x, torch.zeros_like(x)]).T

count = 1024

table = []

for _ in range(count):

table.append(x)

x = torch.func.vmap(lambda x: forward(x))(x)

table = torch.stack(table).swapaxes(0, -1)

qs, ps = table

plt.figure(figsize=(10, 10))

plt.xlim(-2.0, 2.0)

plt.ylim(-2.0, 2.0)

for q, p in zip(qs.cpu().numpy(), ps.cpu().numpy()):

plt.scatter(q, p, color='black', marker='o', s=1)

x = torch.linspace(0.0, 0.5, 11, dtype=dtype, device=device)

x = torch.stack([x, torch.zeros_like(x)]).T

count = 1024

table = []

for _ in range(count):

table.append(x)

x = torch.func.vmap(lambda x: mapping(x))(x)

table = torch.stack(table).swapaxes(0, -1)

qs, ps = table + point.reshape(2, 1, 1)

for q, p in zip(qs.cpu().numpy(), ps.cpu().numpy()):

plt.scatter(q, p, color='red', marker='o', s=1)

tensor([0., 0.], dtype=torch.float64)

tensor([-1.110223024625e-16, 0.000000000000e+00], dtype=torch.float64)

Example-07: Parametric fixed point

[1]:

# Given a mapping depending on a set of knobs (parameters), parametric fixed points can be computed (position of a fixed point as function of parameters)

# Parametric fixed points can be used to construct responce matrices, e.g. closed orbit responce

# In this case only first order derivatives of the fixed point(s) with respect to parameters are computed

# Or higher order expansions can be computed

# In this example parametric fixed points of a symplectic mapping are computed

[2]:

# Import

import numpy

import torch

from ndmap.util import flatten

from ndmap.evaluate import evaluate

from ndmap.propagate import propagate

from ndmap.pfp import fixed_point

from ndmap.pfp import clean_point

from ndmap.pfp import chain_point

from ndmap.pfp import matrix

from ndmap.pfp import parametric_fixed_point

torch.set_printoptions(precision=12, sci_mode=True)

print(torch.cuda.is_available())

from matplotlib import pyplot as plt

import warnings

warnings.filterwarnings("ignore")

True

[3]:

# Set data type and device

dtype = torch.float64

device = torch.device('cpu')

[4]:

# Set mapping

def mapping(x, k):

q, p = x

a, b = k

q, p = q*mu.cos() + p*mu.sin(), p*mu.cos() - q*mu.sin()

return torch.stack([q, p + a*q**2 + b*q**3])

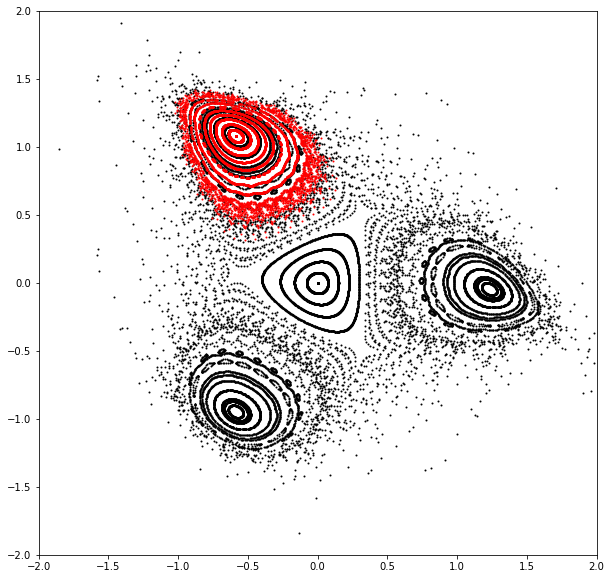

[5]:

# Compute dynamical fixed points

# Note, fixed point might fail due to escape to large values

# Set parameters

mu = 2.0*numpy.pi*torch.tensor(1/5 - 0.01, dtype=dtype, device=device)

k = torch.tensor([0.25, -0.25], dtype=dtype, device=device)

# Compute and plot phase space trajectories

x = torch.linspace(0.0, 1.5, 21, dtype=dtype)

x = torch.stack([x, torch.zeros_like(x)]).T

count = 1024

table = []

for _ in range(count):

table.append(x)

x = torch.func.vmap(lambda x: mapping(x, k))(x)

table = torch.stack(table).swapaxes(0, -1)

qs, ps = table

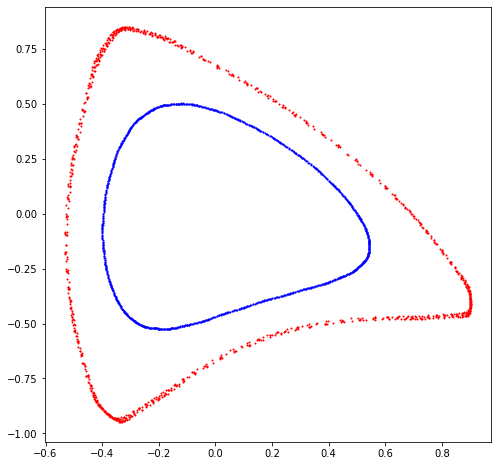

plt.figure(figsize=(10, 10))

plt.xlim(-2.0, 2.0)

plt.ylim(-2.0, 2.0)

for q, p in zip(qs.cpu().numpy(), ps.cpu().numpy()):

plt.scatter(q, p, color='black', marker='o', s=1)

# Set tolerance epsilon

epsilon = 1.0E-12

# Compute chains

period = 5

points = torch.rand((32, 2), dtype=dtype, device=device)

points = torch.func.vmap(lambda point: fixed_point(16, mapping, point, k, power=period))(points)

points = clean_point(period, mapping, points, k, epsilon=epsilon)

chains = torch.func.vmap(lambda point: chain_point(period, mapping, point, k))(points)

# Plot chains

for chain in chains:

point, *_ = chain

value, vector = torch.linalg.eig(matrix(period, mapping, point, k))

color = 'blue' if all(value.log().real < epsilon) else 'red'

plt.scatter(*chain.T, color=color, marker='o')

if color == 'blue':

ep, *_ = chain

else:

hp, *_ = chain

plt.show()

[6]:

# Compute hyperbolic fixed point for a set of knobs

dks = torch.stack(2*[torch.linspace(0.0, 0.01, 101, dtype=dtype, device=device)]).T

fps = [hp]

for dk in dks:

*_, initial = fps

fps.append(fixed_point(16, mapping, initial, k + dk, power=period))

fps = torch.stack(fps)

[7]:

# Compute parametric fixed point

# Set computation order

# Note, change order to observe convergence

order = 4

pfp = parametric_fixed_point((order, ), hp, [k], mapping, power=period)

# Set period mapping and check fixed point propagation

def function(x, k):

for _ in range(period):

x = mapping(x, k)

return x

out = propagate((2, 2), (0, order), pfp, [k], function)

for x, y in zip(flatten(pfp, target=list), flatten(out, target=list)):

print(torch.allclose(x, y))

True

True

True

True

True

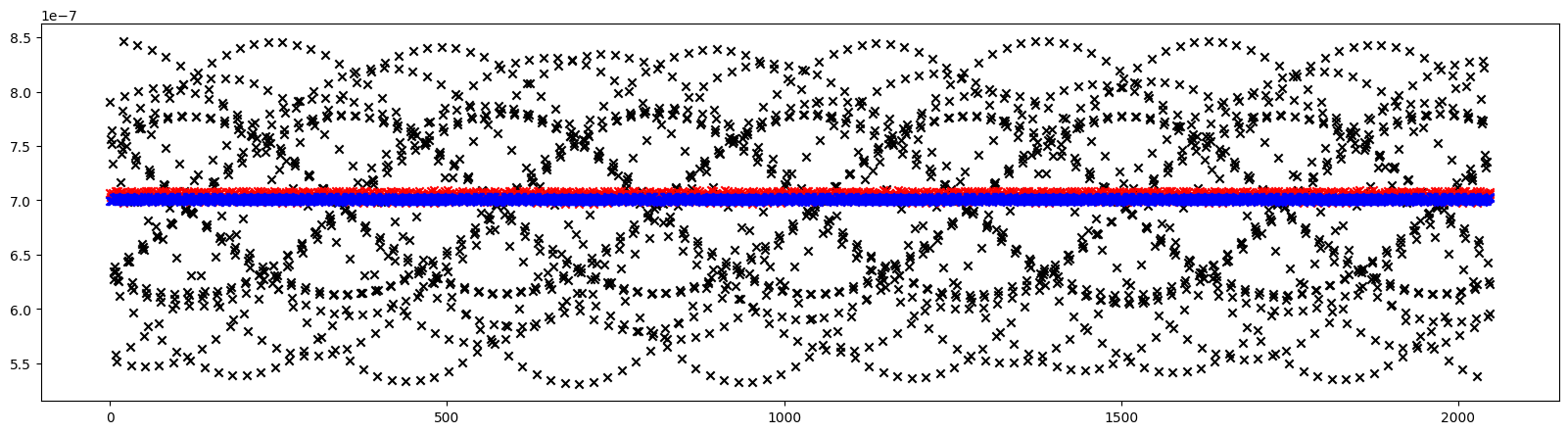

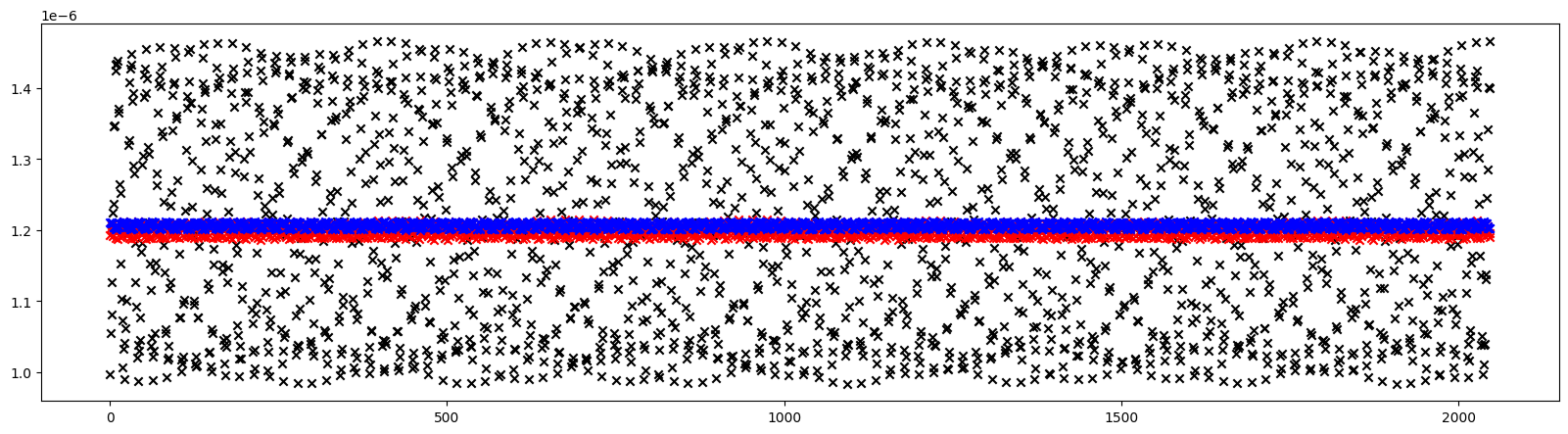

[8]:

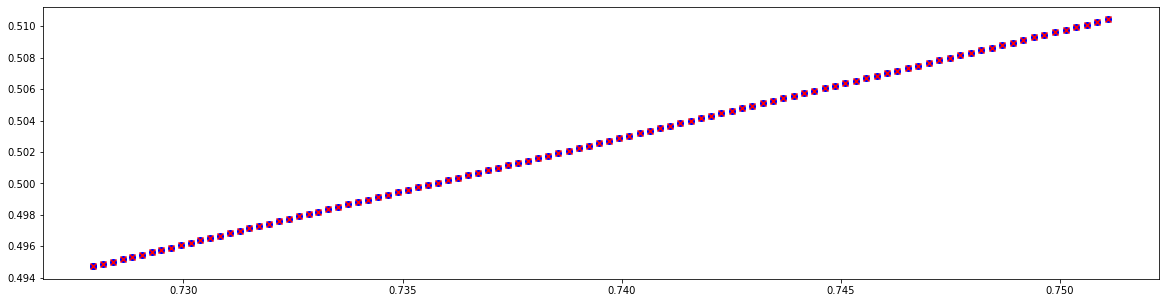

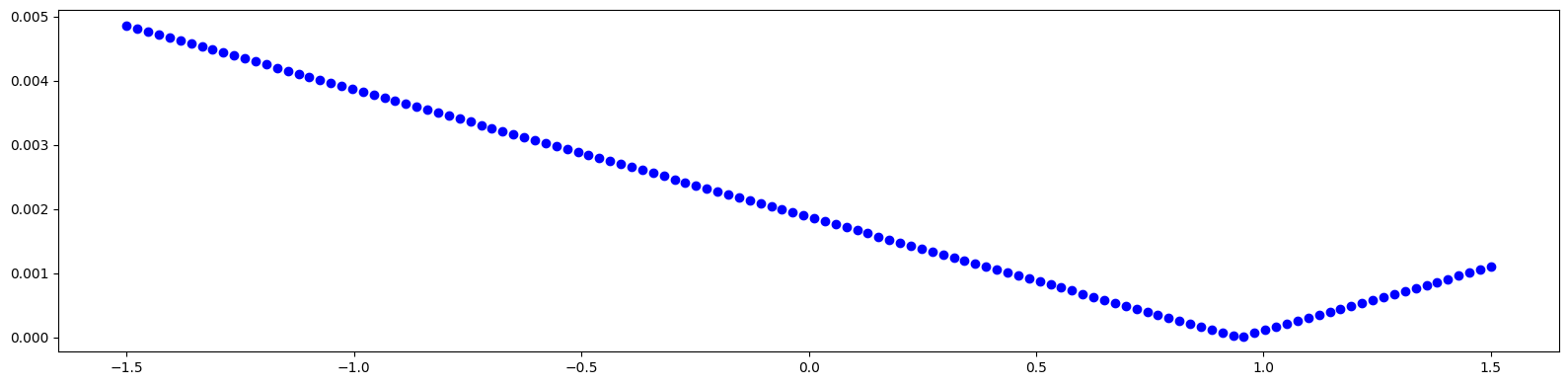

# Plot parametric fixed point position for a given set of knobs

out = torch.func.vmap(lambda dk: evaluate(pfp, [hp, dk]))(dks)

plt.figure(figsize=(20, 5))

plt.scatter(*fps.T.cpu().numpy(), color='blue', marker='o')

plt.scatter(*out.T.cpu().numpy(), color='red', marker='x')

plt.show()

Example-08: Fixed point manipulation (collision)

[1]:

# In this example the distance between a pair of hyperbolic and elliptic fixed points is minimized

# First, using a set of initial guesses within a region, a pair is obtained

# For a given pair, first order parametric dependence of fixed point positions is computed

# Gradient of the distance function between the points is computed (GD minimization)

[2]:

# Import

import numpy

import torch

from ndmap.derivative import derivative

from ndmap.evaluate import evaluate

from ndmap.pfp import fixed_point

from ndmap.pfp import clean_point

from ndmap.pfp import chain_point

from ndmap.pfp import matrix

from ndmap.pfp import parametric_fixed_point

torch.set_printoptions(precision=12, sci_mode=True)

print(torch.cuda.is_available())

from matplotlib import pyplot as plt

import warnings

warnings.filterwarnings("ignore")

True

[3]:

# Set data type and device

dtype = torch.float64

device = torch.device('cpu')

[4]:

# Set mapping

limit = 8

phase = 2.0*numpy.pi*(1/4 + 0.005)

phase = torch.tensor(phase/(limit + 1), dtype=dtype, device=device)

def mapping(state, knobs):

q, p = state

for index in range(limit):

q, p = q*phase.cos() + p*phase.sin(), p*phase.cos() - q*phase.sin()

q, p = q, p + knobs[index]*q**2

q, p = q*phase.cos() + p*phase.sin(), p*phase.cos() - q*phase.sin()

q, p = q, p + q**2

return torch.stack([q, p])

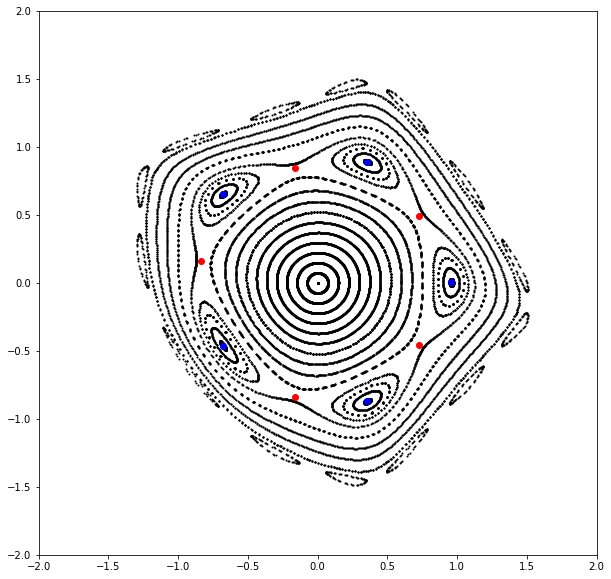

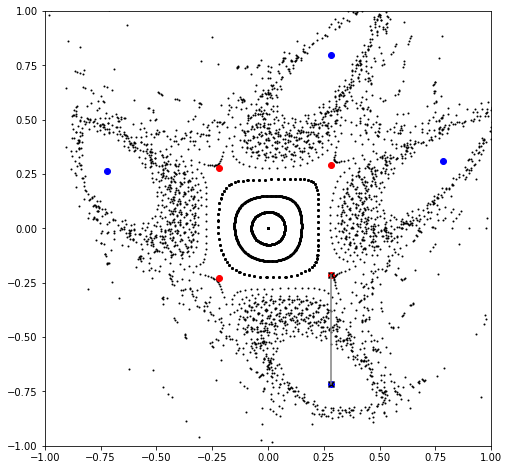

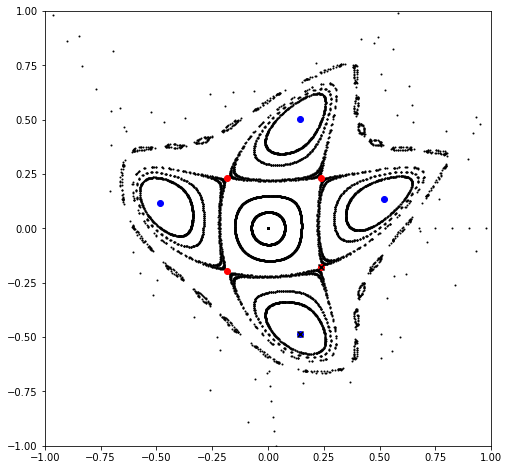

[5]:

# Locate fixed points and select a pair

# Set initial knobs

knobs = torch.tensor(limit*[0.0], dtype=dtype, device=device)

# Compute and plot phase space trajectories

state = torch.linspace(0.0, 1.5, 21, dtype=dtype)

state = torch.stack([state, torch.zeros_like(state)]).T

count = 1024

table = []

for _ in range(count):

table.append(state)

state = torch.func.vmap(lambda state: mapping(state, knobs))(state)

table = torch.stack(table).swapaxes(0, -1)

qs, ps = table

plt.figure(figsize=(8, 8))

plt.xlim(-1., 1.)

plt.ylim(-1., 1.)

for q, p in zip(qs.cpu().numpy(), ps.cpu().numpy()):

plt.scatter(q, p, color='black', marker='o', s=1)

# Set tolerance epsilon

epsilon = 1.0E-12

# Compute chains

period = 4

points = 4.0*torch.rand((512, 2), dtype=dtype, device=device) - 2.0

points = torch.func.vmap(lambda point: fixed_point(64, mapping, point, knobs, power=period))(points)

points = clean_point(period, mapping, points, knobs, epsilon=epsilon)

chains = torch.func.vmap(lambda point: chain_point(period, mapping, point, knobs))(points)

# Plot chains

for chain in chains:

point, *_ = chain

value, vector = torch.linalg.eig(matrix(period, mapping, point, knobs))

color = 'blue' if all(value.log().real < epsilon) else 'red'

plt.scatter(*chain.T, color=color, marker='o')

if color == 'blue':

ep, *_ = chain

else:

hp, *_ = chain

ep_chain, *_ = [chain for chain in chains if ep in chain]

hp_chain, *_ = [chain for chain in chains if hp in chain]

ep, *_ = ep_chain

hp, *_ = hp_chain[(ep - hp_chain).norm(dim=-1) == (ep - hp_chain).norm(dim=-1).min()]

plt.scatter(*ep.cpu().numpy(), color='black', marker='x')

plt.scatter(*hp.cpu().numpy(), color='black', marker='x')

plt.plot(*torch.stack([ep, hp]).T.cpu().numpy(), color='gray')

plt.show()

[6]:

# Compute first order parametric fixed points

order = 1

php = parametric_fixed_point((order, ), hp, [knobs], mapping, power=period)

pep = parametric_fixed_point((order, ), ep, [knobs], mapping, power=period)

[7]:

# Set objective function

def objective(knobs, php, pep):

dhp = evaluate(php, [torch.zeros_like(knobs), knobs])

dep = evaluate(pep, [torch.zeros_like(knobs), knobs])

return (dep - dhp).norm()

[8]:

# Set learning rate and update knobs

lr = 0.0025

gradient = derivative(1, objective, knobs, php, pep, intermediate=False)

knobs -= lr*gradient

[9]:

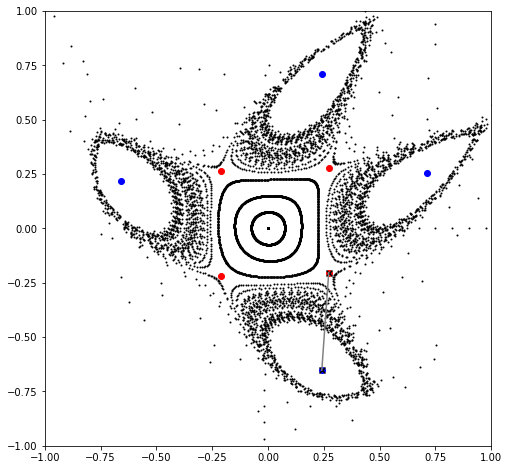

# Iterate

# Set number of iterations

nitr = 5

# Loop

for intr in range(nitr):

# Compute and plot phase space trajectories

state = torch.linspace(0.0, 1.5, 21, dtype=dtype)

state = torch.stack([state, torch.zeros_like(state)]).T

table = []

for _ in range(count):

table.append(state)

state = torch.func.vmap(lambda state: mapping(state, knobs))(state)

table = torch.stack(table).swapaxes(0, -1)

qs, ps = table

plt.figure(figsize=(8, 8))

plt.xlim(-1., 1.)

plt.ylim(-1., 1.)

for q, p in zip(qs.cpu().numpy(), ps.cpu().numpy()):

plt.scatter(q, p, color='black', marker='o', s=1)

# Find fixed points near previous values

points = torch.stack([hp, ep])

points = torch.func.vmap(lambda point: fixed_point(64, mapping, point, knobs, power=period))(points)

points = clean_point(period, mapping, points, knobs, epsilon=epsilon)

chains = torch.func.vmap(lambda point: chain_point(period, mapping, point, knobs))(points)

# Plot chains and selected pair

for chain in chains:

point, *_ = chain

value, vector = torch.linalg.eig(matrix(period, mapping, point, knobs))

color = 'blue' if all(value.log().real < epsilon) else 'red'

plt.scatter(*chain.T, color=color, marker='o')

if color == 'blue':

ep, *_ = chain

else:

hp, *_ = chain

ep_chain, *_ = [chain for chain in chains if ep in chain]

hp_chain, *_ = [chain for chain in chains if hp in chain]

ep, *_ = ep_chain

hp, *_ = hp_chain[(ep - hp_chain).norm(dim=-1) == (ep - hp_chain).norm(dim=-1).min()]

plt.scatter(*ep.cpu().numpy(), color='black', marker='x')

plt.scatter(*hp.cpu().numpy(), color='black', marker='x')

plt.plot(*torch.stack([ep, hp]).T.cpu().numpy(), color='gray')

plt.show()

# Recompute parametric fixed points

# Note, not strictly necessary to do at each iteration

php = parametric_fixed_point((order, ), hp, [knobs], mapping, power=period)

pep = parametric_fixed_point((order, ), ep, [knobs], mapping, power=period)

# Update

lr *= 2.0

gradient = derivative(1, objective, knobs, php, pep, intermediate=False)

knobs -= lr*gradient

Example-09: Fixed point manipulation (change point type)

[1]:

# In this example real parts of the eigenvalues of a hyperbolic fixed point are minimized

# First, using a set of initial guesses within a region, a hyperbolic point is located

# Parametric fixed point is computed and propagated

# Propagated table is used as a surrogate model to generate differentible objective

[2]:

# Import

import numpy

import torch

from ndmap.util import nest

from ndmap.derivative import derivative

from ndmap.evaluate import evaluate

from ndmap.evaluate import compare

from ndmap.propagate import identity

from ndmap.propagate import propagate

from ndmap.pfp import fixed_point

from ndmap.pfp import clean_point

from ndmap.pfp import chain_point

from ndmap.pfp import matrix

from ndmap.pfp import parametric_fixed_point

torch.set_printoptions(precision=12, sci_mode=True)

print(torch.cuda.is_available())

from matplotlib import pyplot as plt

import warnings

warnings.filterwarnings("ignore")

True

[3]:

# Set data type and device

dtype = torch.float64

device = torch.device('cpu')

[4]:

# Set mapping

limit = 8

phase = 2.0*numpy.pi*(1/4 + 0.005)

phase = torch.tensor(phase/(limit + 1), dtype=dtype, device=device)

def mapping(state, knobs):

q, p = state

for index in range(limit):

q, p = q*phase.cos() + p*phase.sin(), p*phase.cos() - q*phase.sin()

q, p = q, p + knobs[index]*q**2

q, p = q*phase.cos() + p*phase.sin(), p*phase.cos() - q*phase.sin()

q, p = q, p + q**2

return torch.stack([q, p])

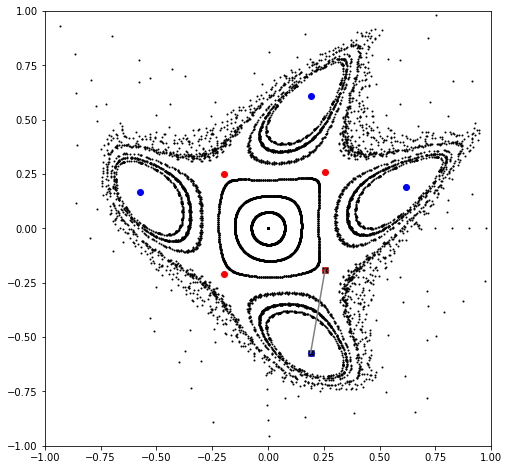

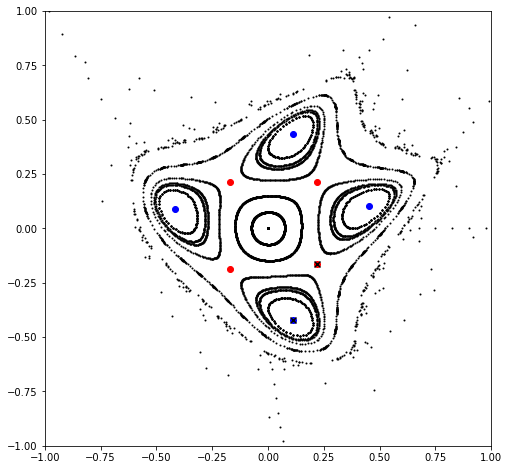

[5]:

# Locate fixed points and select a pair

# Set initial knobs

knobs = torch.tensor(limit*[0.0], dtype=dtype, device=device)

# Compute and plot phase space trajectories

state = torch.linspace(0.0, 1.5, 21, dtype=dtype)

state = torch.stack([state, torch.zeros_like(state)]).T

count = 1024

table = []

for _ in range(count):

table.append(state)

state = torch.func.vmap(lambda state: mapping(state, knobs))(state)

table = torch.stack(table).swapaxes(0, -1)

qs, ps = table

plt.figure(figsize=(8, 8))

plt.xlim(-1., 1.)

plt.ylim(-1., 1.)

for q, p in zip(qs.cpu().numpy(), ps.cpu().numpy()):

plt.scatter(q, p, color='black', marker='o', s=1)

# Set tolerance epsilon

epsilon = 1.0E-12

# Compute chains

period = 4

points = 4.0*torch.rand((512, 2), dtype=dtype, device=device) - 2.0

points = torch.func.vmap(lambda point: fixed_point(64, mapping, point, knobs, power=period))(points)

points = clean_point(period, mapping, points, knobs, epsilon=epsilon)

chains = torch.func.vmap(lambda point: chain_point(period, mapping, point, knobs))(points)

# Plot chains

for chain in chains:

point, *_ = chain

value, vector = torch.linalg.eig(matrix(period, mapping, point, knobs))

color = 'blue' if all(value.log().real < epsilon) else 'red'

plt.scatter(*chain.T, color=color, marker='o')

if color == 'blue':

ep, *_ = chain

else:

hp, *_ = chain

ep_chain, *_ = [chain for chain in chains if ep in chain]

hp_chain, *_ = [chain for chain in chains if hp in chain]

ep, *_ = ep_chain

hp, *_ = hp_chain[(ep - hp_chain).norm(dim=-1) == (ep - hp_chain).norm(dim=-1).min()]

plt.scatter(*ep.cpu().numpy(), color='black', marker='x')

plt.scatter(*hp.cpu().numpy(), color='black', marker='x')

plt.show()

[6]:

# Matrix around (dynamical) fixed points

# Note, eigenvalues of a hyperbolic fixed point are not on the unit circle

em = matrix(period, mapping, ep, knobs)

print(em)

print(torch.linalg.eigvals(em).log().real)

print()

hm = matrix(period, mapping, hp, knobs)

print(hm)

print(torch.linalg.eigvals(hm).log().real)

print()

tensor([[2.739351528140e-01, -1.149307994931e+00],

[5.971467208895e-01, 1.145141455240e+00]], dtype=torch.float64)

tensor([-1.784726318725e-15, -1.784726318725e-15], dtype=torch.float64)

tensor([[1.251182357765e+00, 1.288812994113e-01],

[1.428760927086e-01, 8.139613303868e-01]], dtype=torch.float64)

tensor([2.545448611841e-01, -2.545448611841e-01], dtype=torch.float64)

[7]:

# Compute first order parametric fixed points

order = 1

php = parametric_fixed_point((order, ), hp, [knobs], mapping, power=period)

pep = parametric_fixed_point((order, ), ep, [knobs], mapping, power=period)

[8]:

# Propagate parametric identity table

# Note, propagated table can be used as a surrogate model around (parametric) fixed point

# Here it is used to compute parametric matrix around fixed point and its egenvalues

t = identity((1, 1), [hp, knobs], parametric=php)

t = propagate((2, limit), (1, 1), t, [knobs], nest(period, mapping, knobs))

[9]:

# Set objective function

def objective(knobs):

hm = derivative(1, lambda x, k: evaluate(t, [x, k]), hp, knobs, intermediate=False)

return torch.linalg.eigvals(hm).log().real.abs().sum()

[10]:

# Initial objective value

print(objective(knobs))

tensor(5.090897223682e-01, dtype=torch.float64)

[11]:

# Objective gradient

print(derivative(1, objective, knobs, intermediate=False))

tensor([-1.974190541982e-01, -4.137846718749e-01, -5.970747283789e-01, -7.018818743275e-01,

-7.018818743275e-01, -5.970747283789e-01, -4.137846718749e-01, -1.974190541982e-01],

dtype=torch.float64)

[12]:

# Set learning rate and update knobs

lr = 0.01

gradient = derivative(1, objective, knobs, intermediate=False)

knobs -= lr*gradient

[13]:

# Iterate

# Set number of iterations

nitr = 5

# Loop

for intr in range(nitr):

state = torch.linspace(0.0, 1.5, 21, dtype=dtype)

state = torch.stack([state, torch.zeros_like(state)]).T

count = 1024

table = []

for _ in range(count):

table.append(state)

state = torch.func.vmap(lambda state: mapping(state, knobs))(state)

table = torch.stack(table).swapaxes(0, -1)

qs, ps = table

plt.figure(figsize=(8, 8))

plt.xlim(-1., 1.)

plt.ylim(-1., 1.)

for q, p in zip(qs.cpu().numpy(), ps.cpu().numpy()):

plt.scatter(q, p, color='black', marker='o', s=1)

# Set tolerance epsilon

epsilon = 1.0E-12

# Compute chains

period = 4

points = torch.stack([hp, ep])

points = torch.func.vmap(lambda point: fixed_point(64, mapping, point, knobs, power=period))(points)

points = clean_point(period, mapping, points, knobs, epsilon=epsilon)

chains = torch.func.vmap(lambda point: chain_point(period, mapping, point, knobs))(points)

# Plot chains

for chain in chains:

point, *_ = chain

value, vector = torch.linalg.eig(matrix(period, mapping, point, knobs))

color = 'blue' if all(value.log().real < epsilon) else 'red'

plt.scatter(*chain.T, color=color, marker='o')

if color == 'blue':

ep, *_ = chain

else:

hp, *_ = chain

ep_chain, *_ = [chain for chain in chains if ep in chain]

hp_chain, *_ = [chain for chain in chains if hp in chain]

ep, *_ = ep_chain

hp, *_ = hp_chain[(ep - hp_chain).norm(dim=-1) == (ep - hp_chain).norm(dim=-1).min()]

plt.scatter(*ep.cpu().numpy(), color='black', marker='x')

plt.scatter(*hp.cpu().numpy(), color='black', marker='x')

plt.show()

print(objective(knobs).item())

# Compute parametric fixed points

php = parametric_fixed_point((order, ), hp, [knobs], mapping, power=period)

pep = parametric_fixed_point((order, ), ep, [knobs], mapping, power=period)

# Propagate parametric fixed points

t = identity((1, 1), [hp, knobs], parametric=php)

t = propagate((2, limit), (1, 1), t, [knobs], nest(period, mapping, knobs))

# Update

lr += 0.005

gradient = derivative(1, objective, knobs, intermediate=False)

knobs = knobs - lr*gradient

0.4875424149522949

0.44253915640171704

0.3947019262885917

0.34869831355705994

0.3054166976781556

Example-10: Alignment indices chaos indicators

[1]:

# Import

import numpy

import torch

from ndmap.derivative import derivative

torch.set_printoptions(precision=8, sci_mode=True, linewidth=128)

print(torch.cuda.is_available())

from matplotlib import pyplot as plt

import warnings

warnings.filterwarnings("ignore")

True

[2]:

# Set data type and device

dtype = torch.float64

device = torch.device('cpu')

[3]:

# Set fixed parameters

a1, b1 = 0, 1

a2, b2 = 0, 1

f1 = torch.tensor(2.0*numpy.pi*0.38, dtype=dtype, device=device)

f2 = torch.tensor(2.0*numpy.pi*0.41, dtype=dtype, device=device)

cf1, sf1 = f1.cos(), f1.sin()

cf2, sf2 = f2.cos(), f2.sin()

[4]:

# Set 4D symplectic mapping

def mapping(x):

q1, p1, q2, p2 = x

return torch.stack([

b1*(p1 + (q1**2 - q2**2))*sf1 + q1*(cf1 + a1*sf1),

-((q1*(1 + a1**2)*sf1)/b1) + (p1 + (q1**2 - q2**2))*(cf1 - a1*sf1),

q2*cf2 + (p2*b2 + q2*(a2 - 2*q1*b2))*sf2,

-((q2*(1 + a2**2)*sf2)/b2) + (p2 - 2*q1*q2)*(cf2 - a2*sf2)

])

[5]:

# Set 4D symplectic mapping with tangent dynamics

def tangent(x, vs):

x, m = derivative(1, mapping, x)

vs = torch.func.vmap(lambda v: m @ v)(vs)

return x, vs/vs.norm(dim=-1, keepdim=True)

[6]:

# Set generalized alignment indices computation

# Note, if number if vectors is equal to two, the index tends towards zero for regular orbits

# And tends towards a constant value for chaotic motion

# If the number of vectors is greater than two, index tends towards zero for all cases

# But for chaotic orbits, zero is reached (exponentialy) faster

def gali(vs, threshold=1.0E-12):

return (threshold + torch.linalg.svdvals(vs).prod()).log10()

[7]:

# First, consider two initial conditions (regular and chaotic)

count = 1024

plt.figure(figsize=(8, 8))

x = torch.tensor([0.50000, 0.0, 0.05, 0.0], dtype=dtype)

orbit = []

for _ in range(count):

x = mapping(x)

orbit.append(x)

q, p, *_ = torch.stack(orbit).T

plt.scatter(q, p, s =1, color='blue')

x = torch.tensor([0.68925, 0.0, 0.10, 0.0], dtype=dtype)

orbit = []

for _ in range(count):

x = mapping(x)

orbit.append(x)

q, p, *_ = torch.stack(orbit).T

plt.scatter(q, p, s =1, color='red')

plt.show()

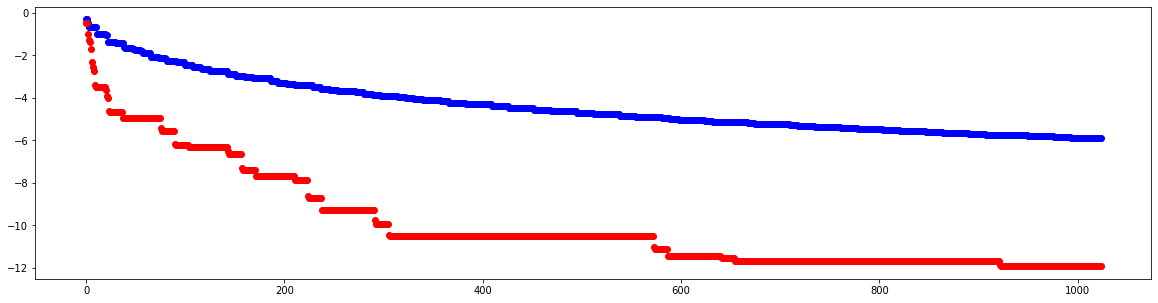

[8]:

# Compute and plot the last gali index at each iteration

# Note, running minimum is appended at each iteration

plt.figure(figsize=(20, 5))

x = torch.tensor([0.50000, 0.0, 0.05, 0.0], dtype=dtype, device=device)

vs = torch.eye(4, dtype=dtype, device=device)

out = []

for _ in range(count):

x, vs = tangent(x, vs)

res = gali(vs)

out.append(res if not out else min(res, out[-1]))

out = torch.stack(out)

plt.scatter(range(count), out, color='blue', marker='o')

x = torch.tensor([0.68925, 0.0, 0.10, 0.0], dtype=dtype, device=device)

vs = torch.eye(4, dtype=dtype, device=device)

out = []

for _ in range(count):

x, vs = tangent(x, vs)

res = gali(vs)

out.append(res if not out else min(res, out[-1]))

out = torch.stack(out)

plt.scatter(range(count), out, color='red', marker='o')

plt.show()

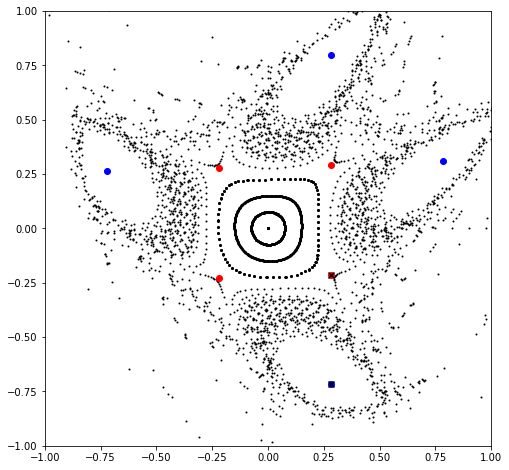

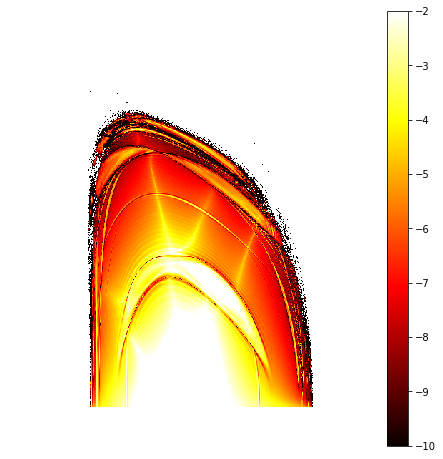

[9]:

# Compute indicator using all avaliable vectors for a grid of initial conditions

def gali(vs):

return torch.linalg.svdvals(vs.nan_to_num()).prod()

# Set grid

n1 = 501

n2 = 501

q1 = torch.linspace(-1.0, +1.0, n1, dtype=dtype, device=device)

q2 = torch.linspace(+0.0, +1.0, n2, dtype=dtype, device=device)

qs = torch.stack(torch.meshgrid(q1, q2, indexing='ij')).swapaxes(-1, 0).reshape(n1*n2, -1)

ps = torch.full_like(qs, 1.0E-10)

q1, q2, p1, p2 = torch.hstack([qs, ps]).T

vs = torch.tensor(n1*n2*[torch.eye(4).tolist()], dtype=dtype, device=device)

qs = torch.stack([q1, p1, q2, p2]).T

# Set tast

# Perform 512 iterations, compute min of indicator value over the next 64 iterations

def task(qs, vs, count=512, total=64, level=1.0E-10):

for _ in range(count):

qs, vs = tangent(qs, vs)

out = []

for _ in range(total):

qs, vs = tangent(qs, vs)

out.append(gali(vs))

return (torch.stack(out).min() + level*torch.sign(qs.norm())).log10()

# Compute and clean data

out = torch.vmap(task)(qs, vs)

out = out.nan_to_num(neginf=0.0)

out[(out >= -2.0)*(out != 0.0)] = -2.0

out[out == 0.0] = torch.nan

out = out.reshape(n1, n2)

# Plot

plt.figure(figsize=(8, 8))

plt.imshow(

out.cpu().numpy(),

vmin=-10.0,

vmax=-2.0,

aspect='equal',

origin='lower',

cmap='hot',

interpolation='nearest')

plt.colorbar()

plt.axis('off')

plt.show()

Example-11: Closed orbit (dispersion)

[1]:

# In this example derivatives of closed orbit with respect to momentum deviation are computed

[2]:

# Import

import numpy

import torch

from ndmap.signature import chop

from ndmap.evaluate import evaluate

from ndmap.evaluate import compare

from ndmap.series import series

from ndmap.propagate import identity

from ndmap.propagate import propagate

from ndmap.pfp import fixed_point

from ndmap.pfp import parametric_fixed_point

torch.set_printoptions(precision=8, sci_mode=True, linewidth=128)

print(torch.cuda.is_available())

from matplotlib import pyplot as plt

import warnings

warnings.filterwarnings("ignore")

True

[3]:

# Set data type and device

dtype = torch.float64

device = torch.device('cpu')

[4]:

# Set elements

def drif(x, w, l):

(qx, px, qy, py), (w, ), l = x, w, l

return torch.stack([qx + l*px/(1 + w), px, qy + l*py/(1 + w), py])

def quad(x, w, kq, l, n=50):

(qx, px, qy, py), (w, ), kq, l = x, w, kq, l/(2.0*n)

for _ in range(n):

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

px, py = px - 2.0*l*kq*qx, py + 2.0*l*kq*qy

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

return torch.stack([qx, px, qy, py])

def sext(x, w, ks, l, n=10):

(qx, px, qy, py), (w, ), ks, l = x, w, ks, l/(2.0*n)

for _ in range(n):

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

px, py = px - 1.0*l*ks*(qx**2 - qy**2), py + 2.0*l*ks*qx*qy

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

return torch.stack([qx, px, qy, py])

def bend(x, w, r, kq, ks, l, n=50):

(qx, px, qy, py), (w, ), r, kq, ks, l = x, w, r, kq, ks, l/(2.0*n)

for _ in range(n):

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

px, py = px - 2.0*l*kq*qx - 1.0*l*ks*(qx**2 - qy**2) + 2.0*l/r**2*(w*r - qx), py + 2.0*l*kq*qy + 2.0*l*ks*qx*qy

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

return torch.stack([qx, px, qy, py])

def kick(x, cx, cy):

(qx, px, qy, py) = x

return torch.stack([qx, px + cx, qy, py + cy])

def slip(x, dx, dy):

(qx, px, qy, py) = x

return torch.stack([qx + dx, px, qy + dy, py])

[5]:

# Set transport maps between observation points

# Note, here observation poins are locations between elements, lattice start and lattice end

# An observable (closed orbit) is computed at observation points

# All maps are expected to have identical signature of differentible parameters

# State and momentum deviation in this example

# But each map can have any number of additional args and kwargs after required parameters

def map_01_02(x, w): return quad(x, w, 0.19, 0.50)

def map_02_03(x, w): return drif(x, w, 0.45)

def map_03_04(x, w): return sext(x, w, 0.00, 0.10)

def map_04_05(x, w): return drif(x, w, 0.45)

def map_05_06(x, w): return bend(x, w, 22.92, 0.015, 0.00, 3.0)

def map_06_07(x, w): return drif(x, w, 0.45)

def map_07_08(x, w): return sext(x, w, 0.00, 0.10)

def map_08_09(x, w): return drif(x, w, 0.45)

def map_09_10(x, w): return quad(x, w, -0.21, 0.50)

def map_10_11(x, w): return quad(x, w, -0.21, 0.50)

def map_11_12(x, w): return drif(x, w, 0.45)

def map_12_13(x, w): return sext(x, w, 0.00, 0.10)

def map_13_14(x, w): return drif(x, w, 0.45)

def map_14_15(x, w): return bend(x, w, 22.92, 0.015, 0.00, 3.0)

def map_15_16(x, w): return drif(x, w, 0.45)

def map_16_17(x, w): return sext(x, w, 0.00, 0.10)

def map_17_18(x, w): return drif(x, w, 0.45)

def map_18_19(x, w): return quad(x, w, 0.19, 0.50)

transport = [

map_01_02,

map_02_03,

map_03_04,

map_04_05,

map_05_06,

map_06_07,

map_07_08,

map_08_09,

map_09_10,

map_10_11,

map_11_12,

map_12_13,

map_13_14,

map_14_15,

map_15_16,

map_16_17,

map_17_18,

map_18_19

]

# Define one-turn transport

def fodo(x, w):

for mapping in transport:

x = mapping(x, w)

return x

[6]:

# The first step is to compute dynamical fixed point

# Set initial guess

# Note, in this example zero is a fixed point

x = torch.tensor([0.0, 0.0, 0.0, 0.0], dtype=dtype, device=device)

# Set knobs

w = torch.tensor([0.0], dtype=dtype, device=device)

# Find fixed point

fp = fixed_point(16, fodo, x, w, power=1)

print(fp)

tensor([0., 0., 0., 0.], dtype=torch.float64)

[7]:

# Compute parametric closed orbit

pfp = parametric_fixed_point((2, ), fp, [w], fodo)

chop(pfp)

# Print series representation

for key, value in series((4, 1), (0, 2), pfp).items():

print(f'{key}: {value.cpu().numpy()}')

(0, 0, 0, 0, 0): [0. 0. 0. 0.]

(0, 0, 0, 0, 1): [1.81613351 0. 0. 0. ]

(0, 0, 0, 0, 2): [0.56855511 0. 0. 0. ]

[8]:

# Check convergence

print(evaluate(series((4, 1), (0, 0), pfp), [x, w + 1.0E-3]))

print(evaluate(series((4, 1), (0, 1), pfp), [x, w + 1.0E-3]))

print(evaluate(series((4, 1), (0, 2), pfp), [x, w + 1.0E-3]))

print()

out = fixed_point(16, fodo, x, w + 1.0E-3, power=1)

chop([out])

print(out)

print()

tensor([0., 0., 0., 0.], dtype=torch.float64)

tensor([1.81613351e-03, 0.00000000e+00, 0.00000000e+00, 0.00000000e+00], dtype=torch.float64)

tensor([1.81670206e-03, 0.00000000e+00, 0.00000000e+00, 0.00000000e+00], dtype=torch.float64)

tensor([ 1.81670185e-03, 1.13882757e-19, -5.50391130e-235, 0.00000000e+00], dtype=torch.float64)

[9]:

# Propagate closed orbit

out = []

jet = identity((0, 1), fp, parametric=pfp)

out.append(jet)

for mapping in transport:

jet = propagate((4, 1), (0, 2), jet, [w], mapping)

out.append(jet)

[10]:

# Check periodicity

print(compare(pfp, jet))

True

[11]:

# Plot 1st order dispersion

pos = [0.00, 0.50, 0.95, 1.05, 1.50, 4.50, 4.95, 5.05, 5.50, 6.00, 6.50, 6.95, 7.05, 7.50, 10.50, 10.95, 11.05, 11.50, 12.00]

res = torch.stack([series((4, 1), (0, 2), jet)[(0, 0, 0, 0, 1)][0] for jet in out])

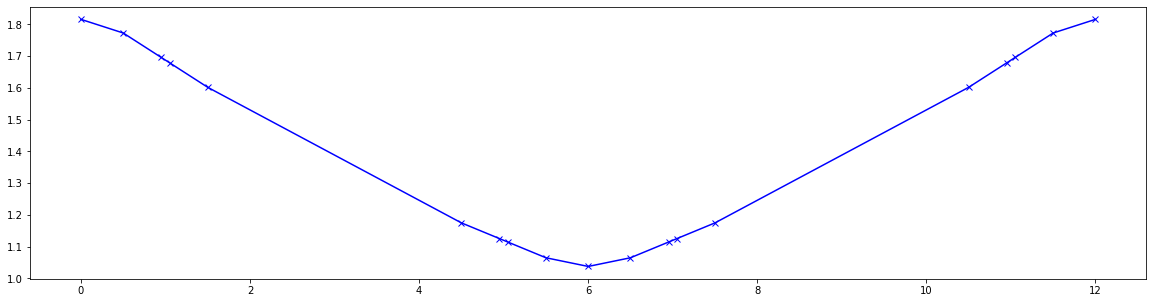

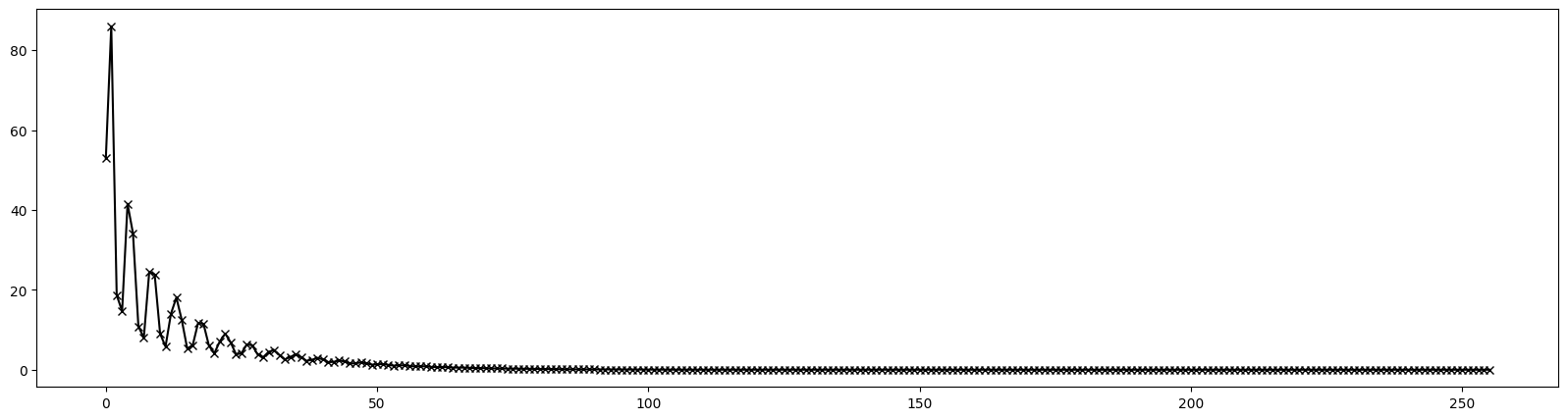

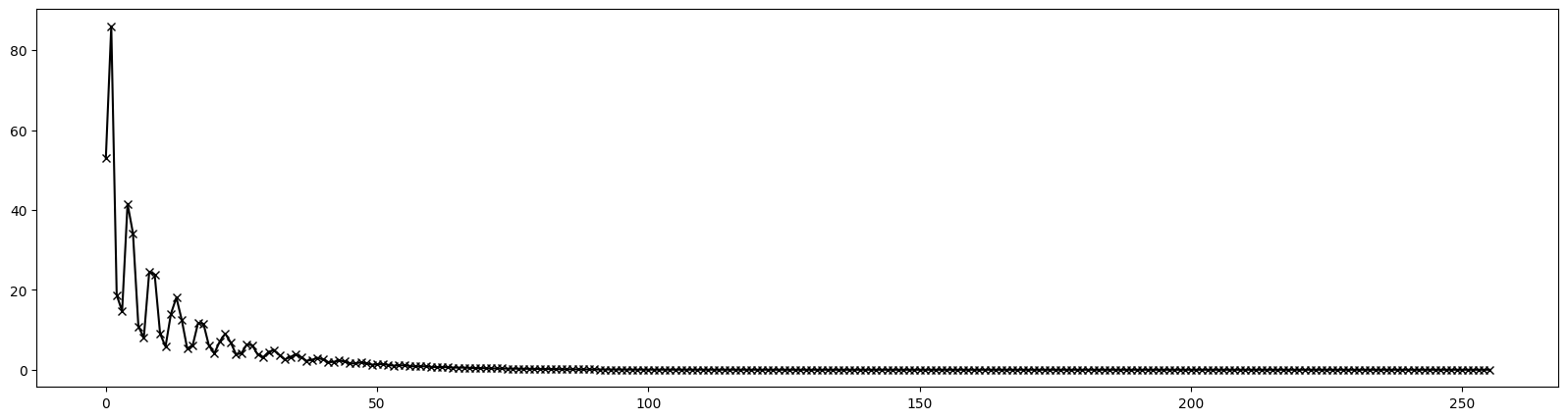

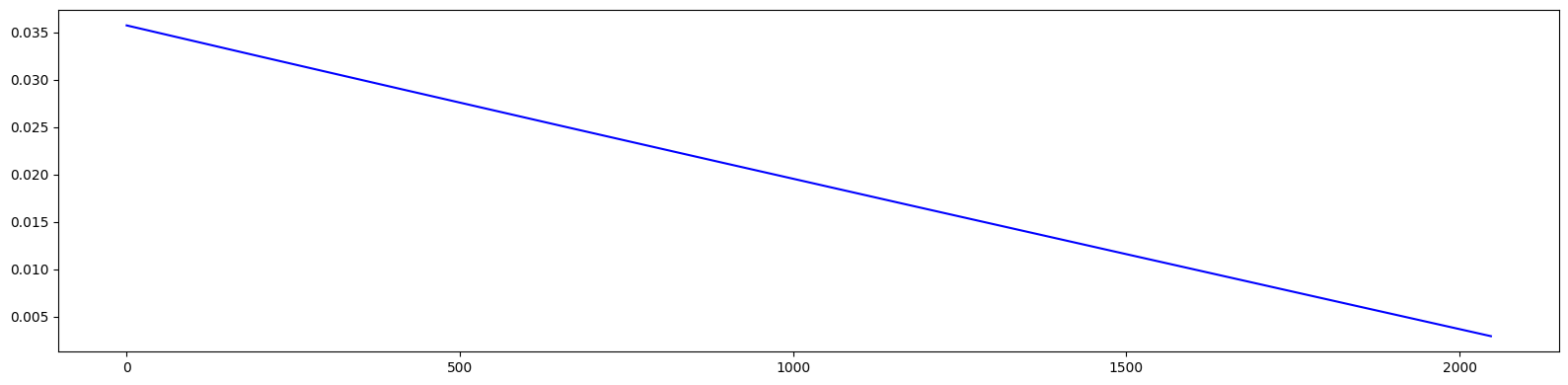

plt.figure(figsize=(20, 5))

plt.plot(pos, res.cpu().numpy(), marker='x', color='blue')

plt.show()

[12]:

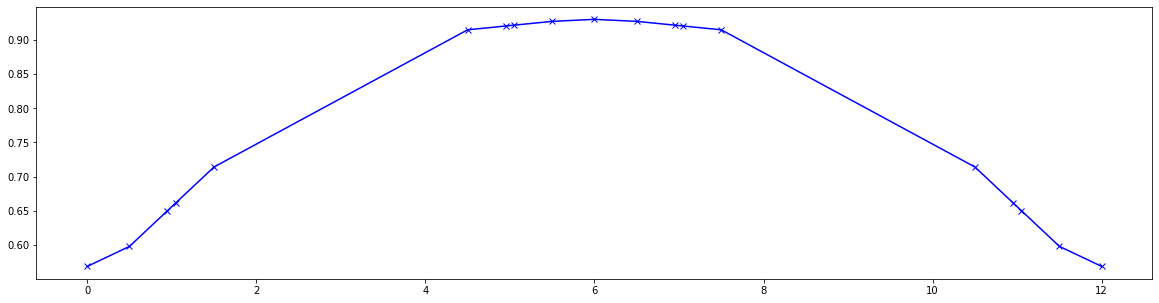

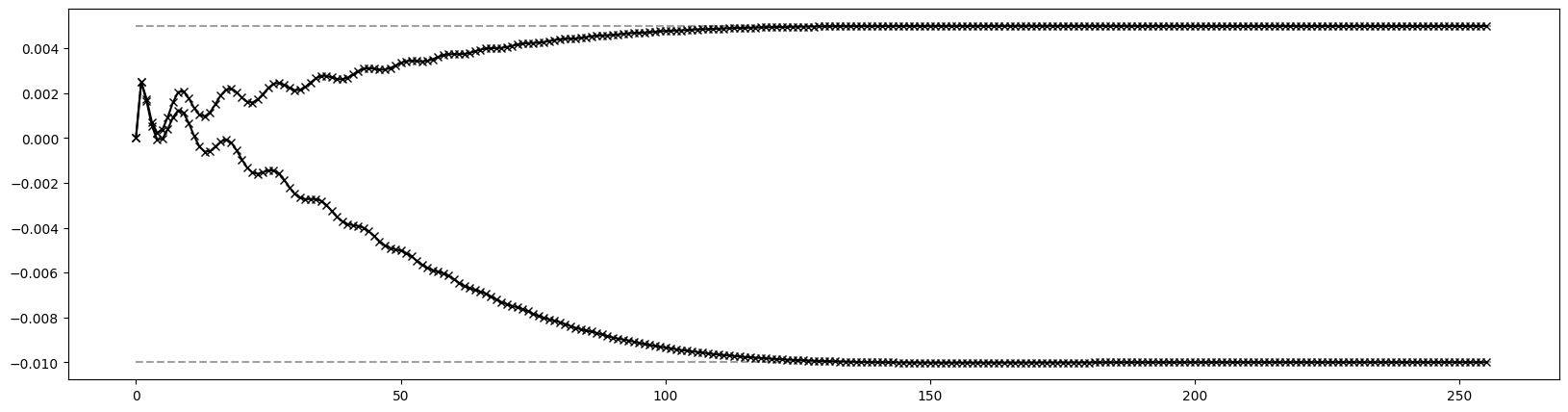

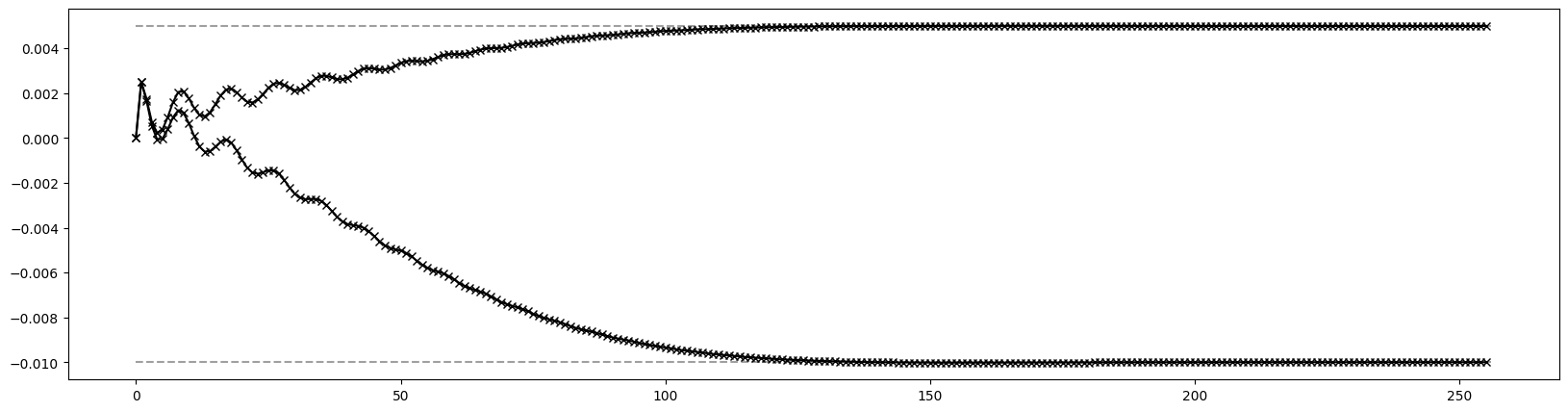

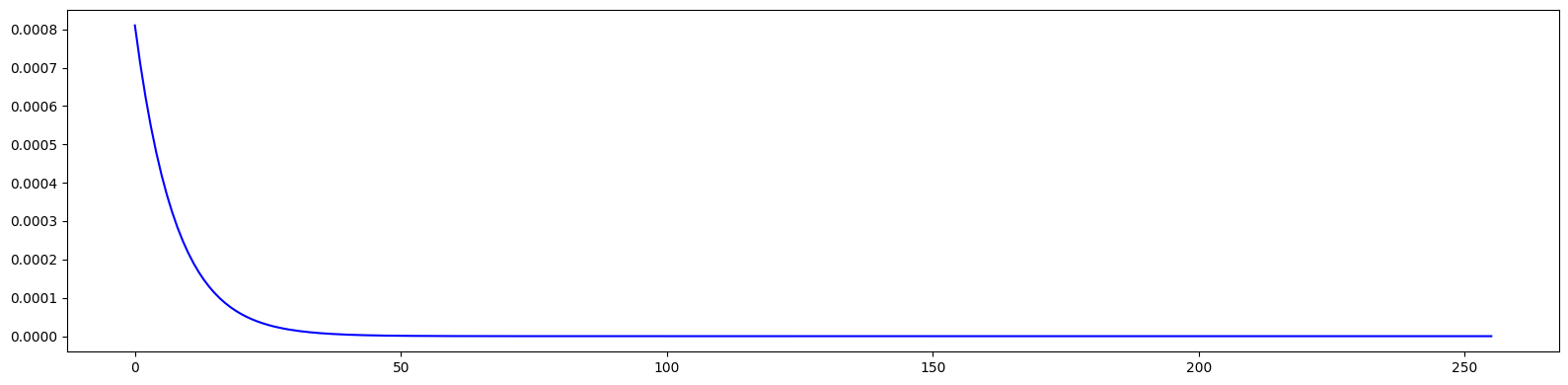

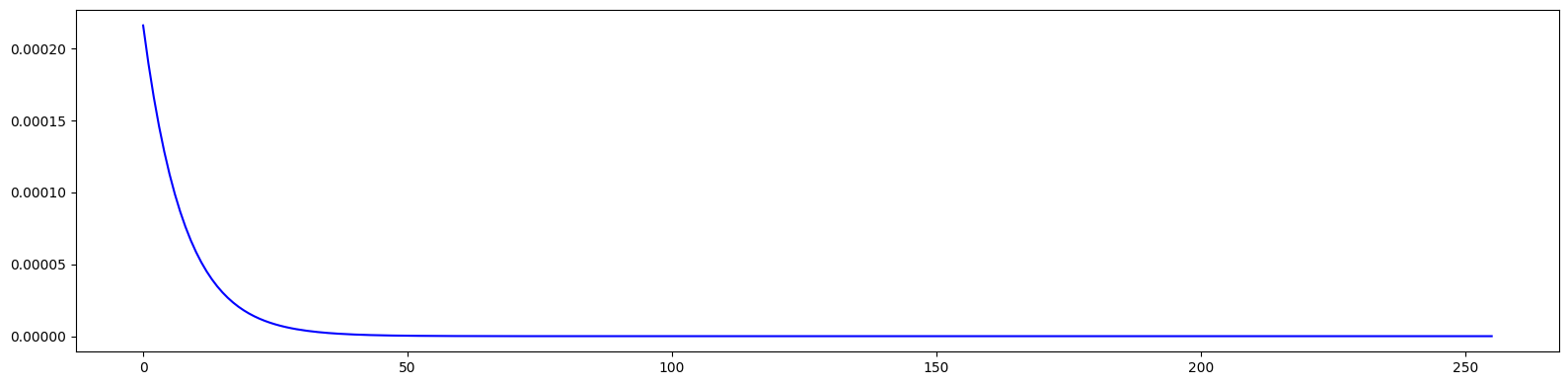

# Plot 2nd order dispersion

pos = [0.00, 0.50, 0.95, 1.05, 1.50, 4.50, 4.95, 5.05, 5.50, 6.00, 6.50, 6.95, 7.05, 7.50, 10.50, 10.95, 11.05, 11.50, 12.00]

res = torch.stack([series((4, 1), (0, 2), jet)[(0, 0, 0, 0, 2)][0] for jet in out])

plt.figure(figsize=(20, 5))

plt.plot(pos, res.cpu().numpy(), marker='x', color='blue')

plt.show()

Example-12: Closed orbit (quadrupole shift)

[1]:

# Import

import numpy

import torch

from ndmap.derivative import derivative

from ndmap.signature import chop

from ndmap.evaluate import evaluate

from ndmap.evaluate import compare

from ndmap.propagate import identity

from ndmap.propagate import propagate

from ndmap.pfp import fixed_point

from ndmap.pfp import parametric_fixed_point

torch.set_printoptions(precision=6, sci_mode=True, linewidth=128)

print(torch.cuda.is_available())

from matplotlib import pyplot as plt

import warnings

warnings.filterwarnings("ignore")

True

[2]:

# Set data type and device

dtype = torch.float64

device = torch.device('cpu')

[3]:

# Set elements

def drif(x, w, l):

(qx, px, qy, py), (w, ), l = x, w, l

return torch.stack([qx + l*px/(1 + w), px, qy + l*py/(1 + w), py])

def quad(x, w, kq, l, n=50):

(qx, px, qy, py), (w, ), kq, l = x, w, kq, l/(2.0*n)

for _ in range(n):

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

px, py = px - 2.0*l*kq*qx, py + 2.0*l*kq*qy

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

return torch.stack([qx, px, qy, py])

def sext(x, w, ks, l, n=10):

(qx, px, qy, py), (w, ), ks, l = x, w, ks, l/(2.0*n)

for _ in range(n):

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

px, py = px - 1.0*l*ks*(qx**2 - qy**2), py + 2.0*l*ks*qx*qy

qx, qy = qx + l*px/(1 + w), qy + l*py/(1 + w)

return torch.stack([qx, px, qy, py])

def bend(x, w, r, kq, ks, l, n=50):

(qx, px, qy, py), (w, ), r, kq, ks, l = x, w, r, kq, ks, l/(2.0*n)